Projects

XR4DRAMA

Description:

XR4DRAMA is an EU H2020 project aimed at revolutionizing situational awareness and decision making in crisis scenarios and media production through the use of Extended Reality (XR) and complementary technologies. The proof of concept initially focuses on disaster and media management, with the goal of later applying the insights and systems gained to additional use cases.

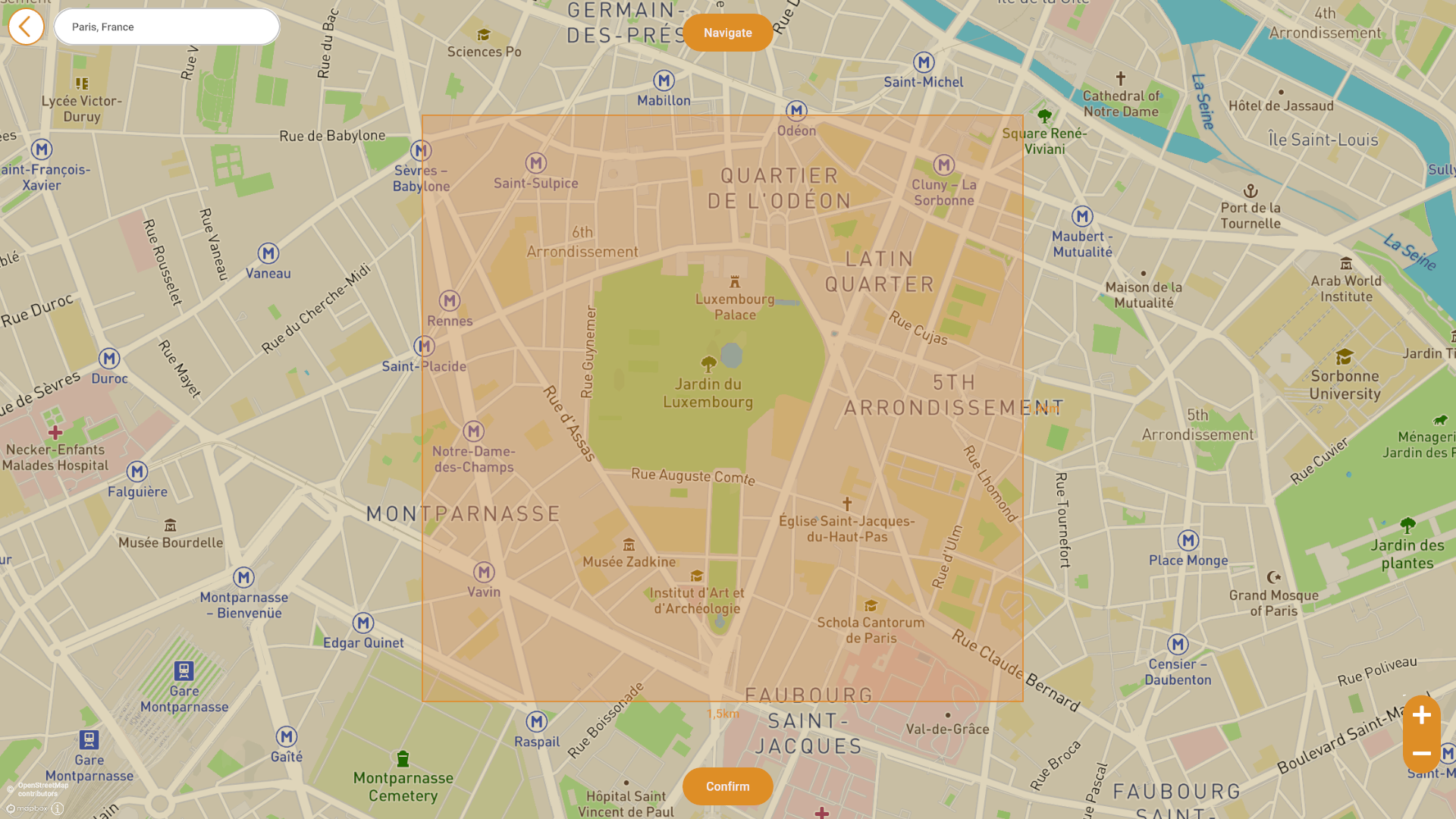

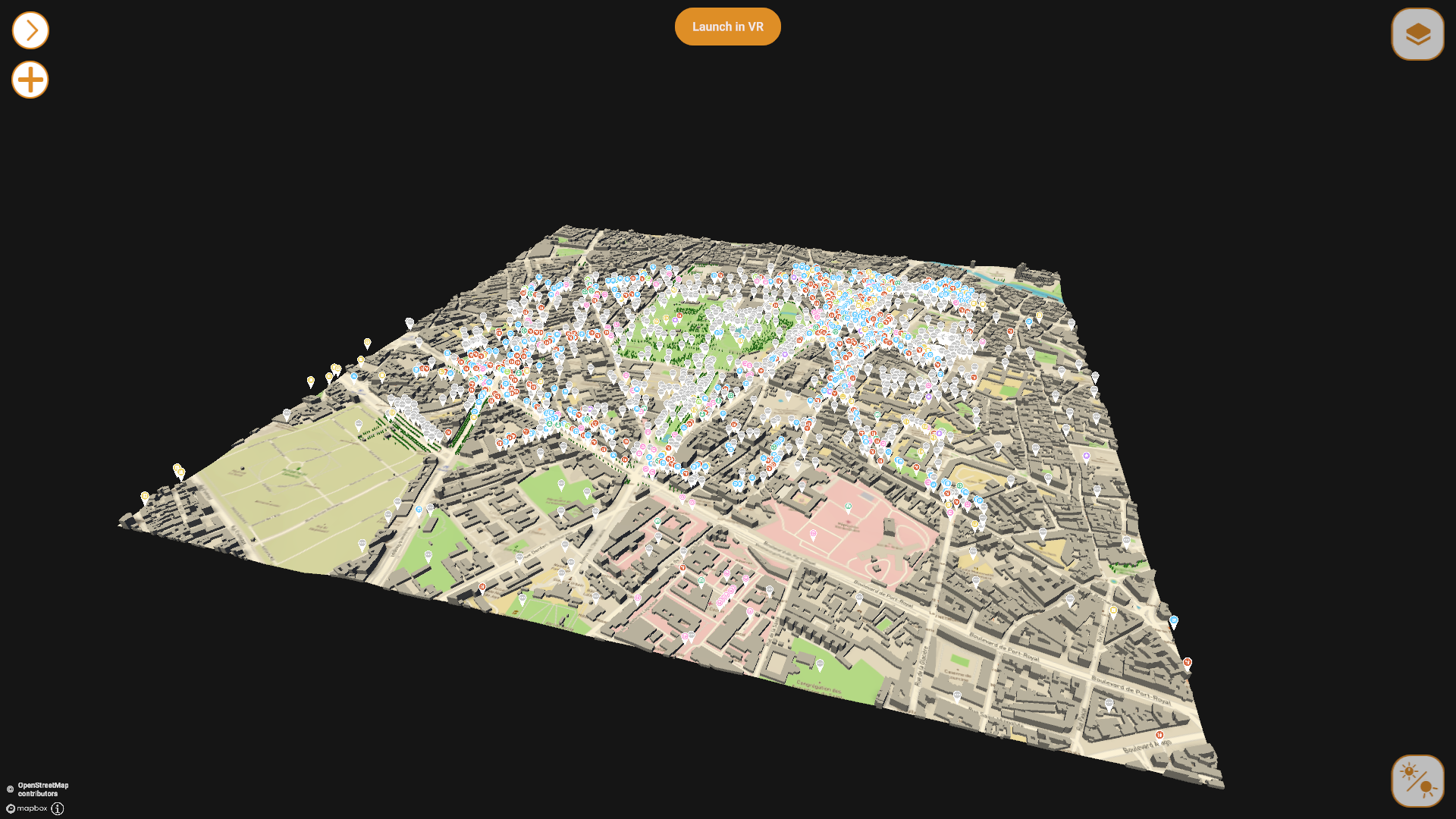

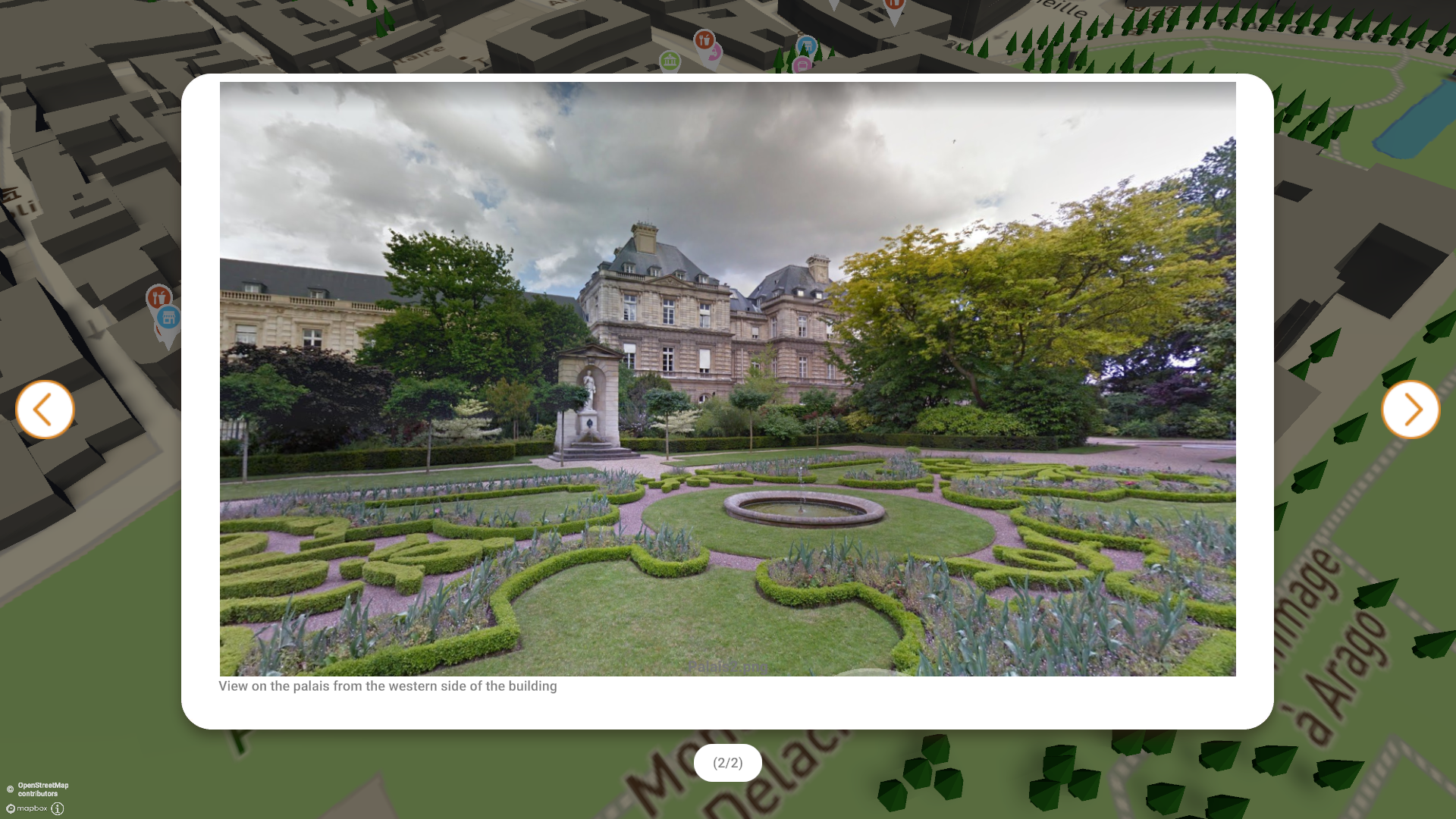

XR4DRAMA adopts a data-driven approach: disaster managers, first responders, journalists, and media producers gain access to extensive data, ranging from geographic maps, sociographic information, and cultural context to sensor readings such as temperature, humidity, and especially water level measurements and flood forecasts. These data are georeferenced and visualized in a central control center system, covering locations of interest for media production as well as critical measurement points for disaster response. The application can be used on desktop and mobile devices as well as in Virtual Reality (VR). In VR, the environment can be experienced within a 3D world created using photogrammetry, allowing participants to gain a realistic on-site experience and perceive the situation from a lifelike perspective.

A central aspect of XR4DRAMA is distributed situational awareness. The georeferenced display of locations and events must be continuously updated. To achieve this, the control center provides interfaces between remote managers and field personnel, enabling real-time exchange of information and continuous updates within the VR environment. This creates a shared, dynamic understanding of the situation that allows for safe and efficient real-time decision making.

In short, XR4DRAMA ensures that all relevant digital information, ranging from disaster measurement data to media production applications, is centrally collected, presented in an immersive XR environment, and continuously updated. This creates a distributed, data-driven situational awareness that significantly enhances planning, decision making, and the implementation of actions in critical scenarios.

Tasks:

My team took full responsibility for the conception, architecture, and implementation of the control center, which serves as the core component of XR4DRAMA. The software visualizes georeferenced data in real time, showing not only the locations relevant to media production but also the precise positions of field personnel. Each team member is represented on the map with a stress level indicator, allowing operators to instantly identify who is at risk or requires additional support.

To enhance situational awareness, we integrated social media content using AI models. Posts are automatically scanned, relevant information is classified, and the results are displayed directly on the map. Users can filter by categories such as hazard alerts. Additionally, operators gain insight into people in danger and other critical situations through AI-analyzed images. The system prioritizes these inputs by urgency, ensuring that operators always have the most important data at a glance.
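The filtering and urgency-based prioritization described above can be sketched as a small ranking step. This is a minimal illustration, not the XR4DRAMA implementation: the `ClassifiedPost` fields, the category names, and the numeric urgency score (assumed to come from an upstream AI classifier) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClassifiedPost:
    # Hypothetical record produced by an upstream AI classifier.
    post_id: str
    category: str   # e.g. "hazard_alert", "person_in_danger" (illustrative names)
    urgency: float  # assumed score in [0, 1]; higher means more urgent
    lat: float
    lon: float


def prioritize(posts, categories=None, limit=None):
    """Keep posts in the selected categories (all if None), most urgent first."""
    selected = [p for p in posts if categories is None or p.category in categories]
    selected.sort(key=lambda p: p.urgency, reverse=True)
    return selected if limit is None else selected[:limit]


if __name__ == "__main__":
    feed = [
        ClassifiedPost("a", "hazard_alert", 0.4, 0.0, 0.0),
        ClassifiedPost("b", "person_in_danger", 0.9, 0.0, 0.0),
        ClassifiedPost("c", "hazard_alert", 0.7, 0.0, 0.0),
    ]
    for post in prioritize(feed, categories={"hazard_alert"}):
        print(post.post_id, post.urgency)
```

Returning a new sorted list leaves the incoming feed untouched, so the same posts can be re-ranked whenever the operator changes the category filter.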

The control center operator can use the software to assign tasks to field personnel, provide them with additional information, and simultaneously monitor their workload. This ensures that emergency teams are not overburdened during operations.
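One simple way to assign tasks while keeping workloads bounded is a greedy least-loaded assignment with a per-person cap. The sketch below assumes a hypothetical effort estimate per task and a hypothetical `max_load` threshold. It only illustrates the idea of not overburdening teams and is not the control center's actual scheduling logic.

```python
import heapq


def assign_tasks(tasks, personnel, max_load):
    """Greedily give each (name, effort) task to the currently least-loaded person.

    Tasks that would push even the least-loaded person above max_load are
    returned as unassigned, so the operator can decide how to handle them.
    """
    heap = [(0.0, name) for name in personnel]  # (current load, person)
    heapq.heapify(heap)
    assignments = {name: [] for name in personnel}
    unassigned = []
    # Place larger tasks first so they are spread across the team.
    for task, effort in sorted(tasks, key=lambda t: t[1], reverse=True):
        load, person = heapq.heappop(heap)
        if load + effort > max_load:
            # If the least-loaded person cannot take it, nobody can.
            unassigned.append(task)
            heapq.heappush(heap, (load, person))
            continue
        assignments[person].append(task)
        heapq.heappush(heap, (load + effort, person))
    return assignments, unassigned
```

For example, with two people, a cap of 5, and tasks of effort 4, 3, 2, and 2, the last task is reported as unassigned instead of silently overloading someone.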

Another key feature of the architecture is support for multiple users in a decentralized manner. Through voice chat within VR, field teams can virtually meet, exchange insights, and collaboratively analyze the situation. For media production, I developed a virtual drone: it can be launched directly within the VR environment alongside its standard flight mode, giving teams an accurate sense of camera angles and fields of view. Additionally, we implemented a day-night lighting simulation that allows planners to account for factors such as shadows, light conditions, and their impact on footage, even during the early planning phase.
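The sense of field of view that the virtual drone conveys follows from basic geometry: a camera pointing straight down from altitude h with field of view θ covers a ground strip of width 2h·tan(θ/2). The helper below is an illustrative sketch under stated assumptions (flat terrain, nadir-pointing camera) and is not the drone implementation used in XR4DRAMA.

```python
import math


def ground_footprint(altitude_m, hfov_deg, vfov_deg):
    """Return (width, height) in meters of the ground area seen by a nadir camera.

    Assumes flat terrain and a camera pointing straight down.
    """
    width = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    height = 2.0 * altitude_m * math.tan(math.radians(vfov_deg) / 2.0)
    return width, height


if __name__ == "__main__":
    w, h = ground_footprint(50.0, 84.0, 62.0)
    print(f"{w:.1f} m x {h:.1f} m")
```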

IMOCO4.E

Description:

IMOCO4.E is an EU-funded research project that develops edge-to-cloud motion control intelligence for human-in-the-loop systems. The goal is to deliver a reference platform that combines AI and digital twin toolchains with modular building blocks for robust manufacturing applications. The platform is designed to excel in energy efficiency, simple configuration, traceability, and cybersecurity, and will be tested across a range of application fields, including the semiconductor and packaging industries, industrial robotics, and healthcare systems, to demonstrate its benefits throughout the entire production and usage chain. At its core, IMOCO4.E leverages novel sensor technologies, model-based approaches, and the Industrial Internet of Things to make mechatronic systems smarter, more adaptable, more reliable, and more powerful, while aligning their performance with physical constraints. The project evaluates requirements for the future smart manufacturing infrastructure in Europe and collaborates with the Industry4.E cluster and other Lighthouse projects to define best-practice methods for the European research and innovation ecosystem. We were involved in the practical implementation of two pilot projects.

Tasks:

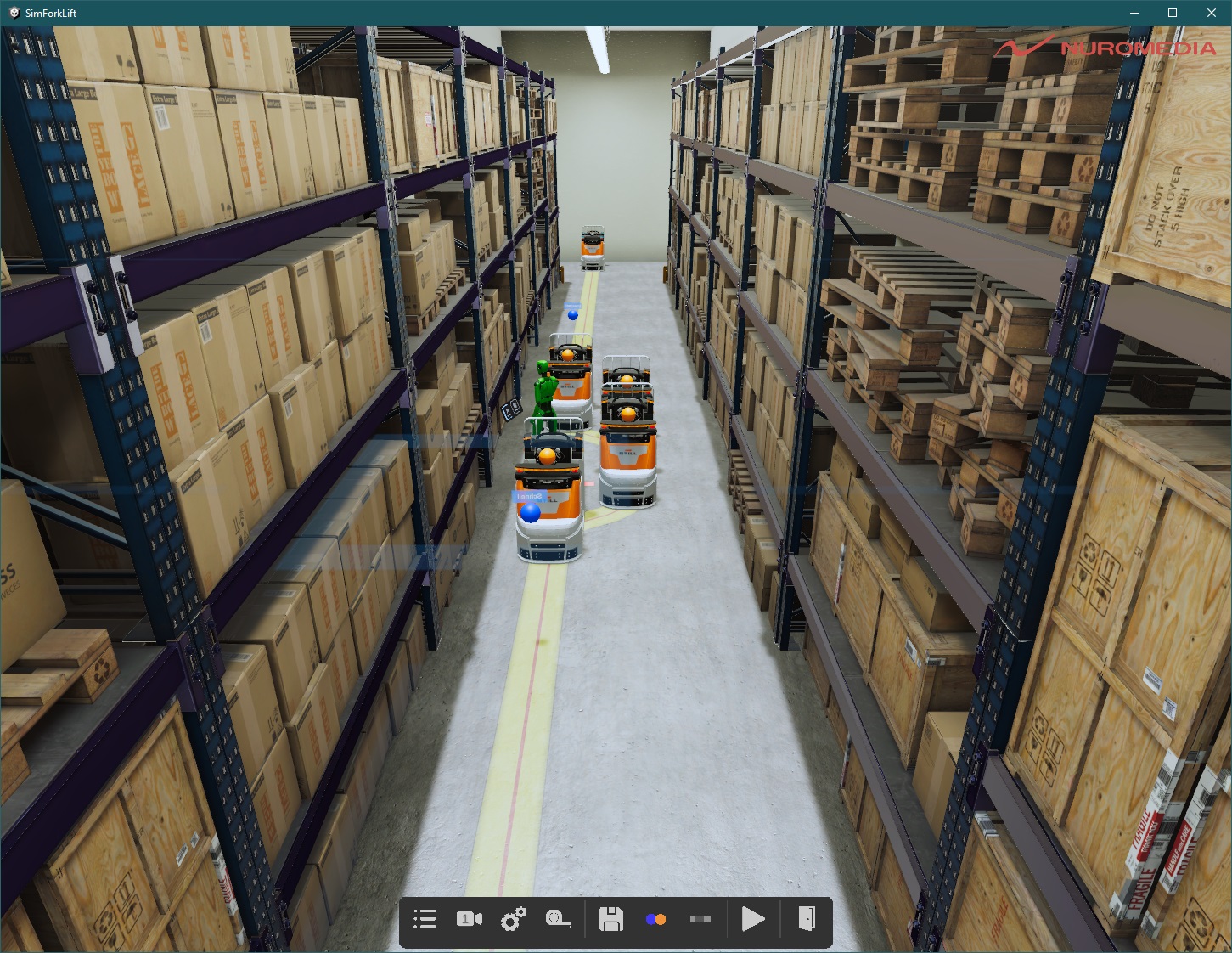

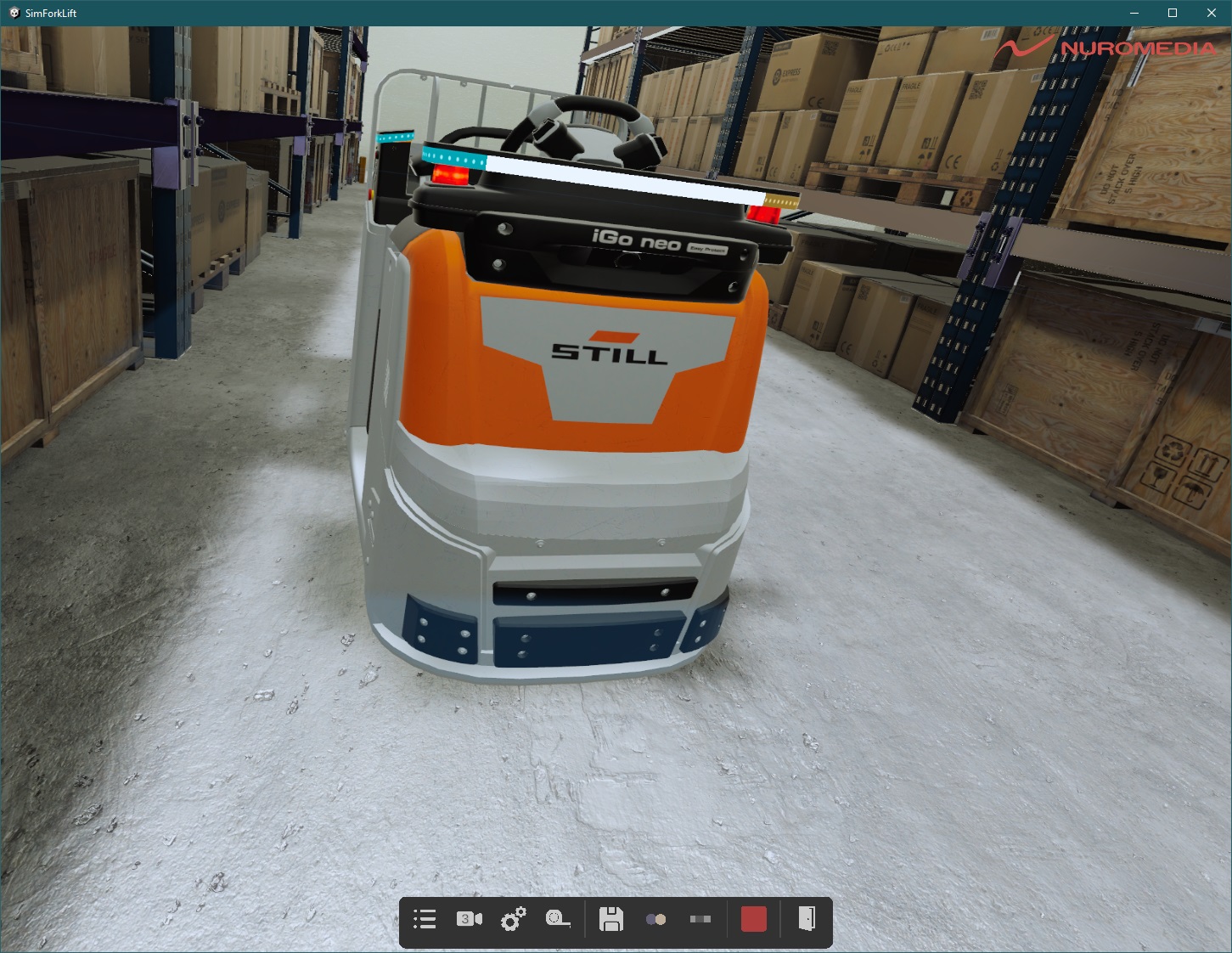

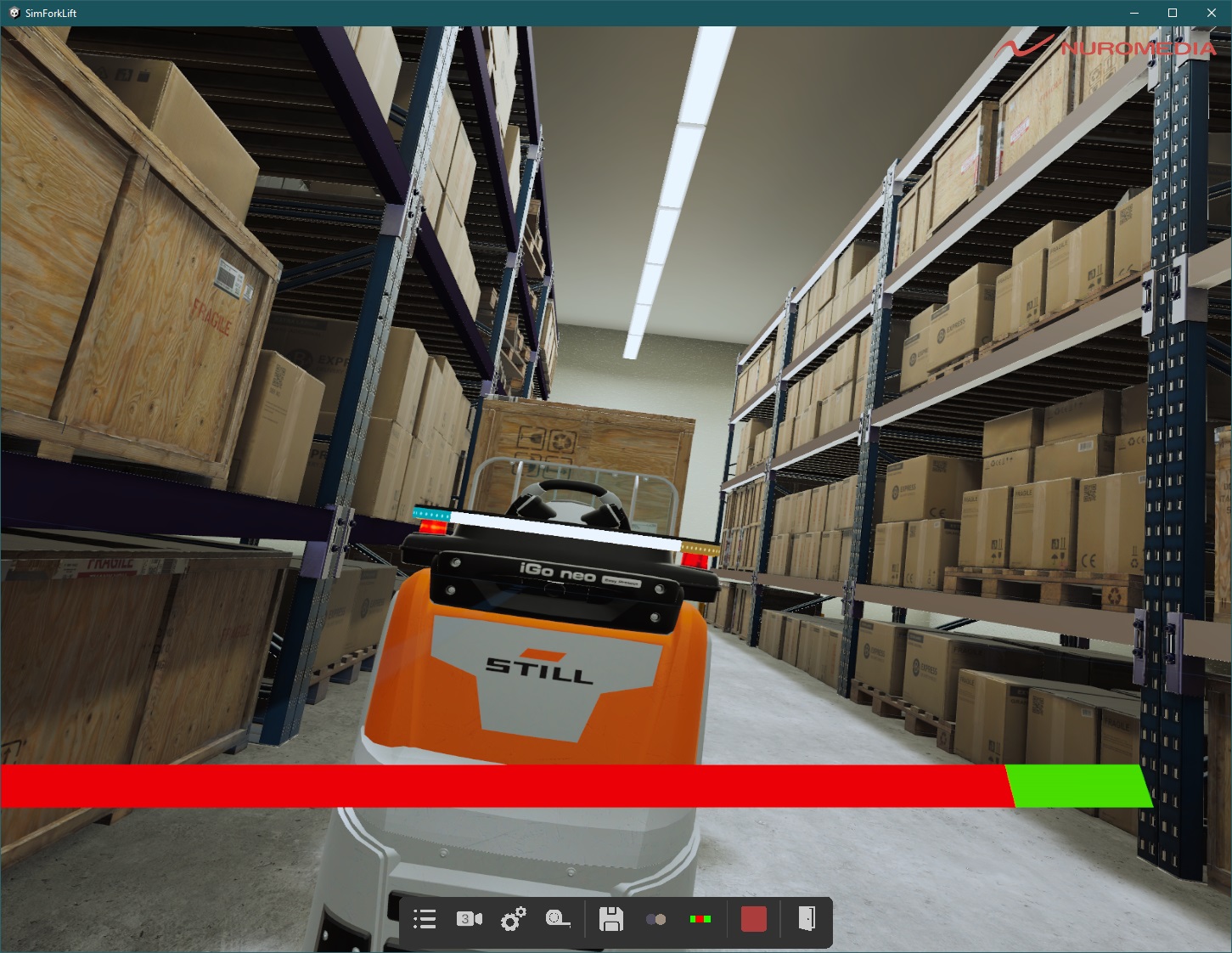

Pilot 1 – iGo neo

I developed a VR digital twin of the STILL iGo neo that could be controlled via a PlayStation 4 controller, a gaming steering wheel, or an Ethernet connection attached directly to the vehicle. This approach was expanded into a testing environment where users could define braking points and evasive routes and simulate them in a safe virtual setting. The data collected during these simulations fed into an AI training pipeline designed to optimize actions that comply with regulations but feel uncomfortable to humans, such as sudden braking. In addition, I built a second application that replicates the entire warehouse, including an equipment editor, and generates synthetic micro-Doppler radar data for AI training. This application was equipped with a ROS 2 interface, making it multi-user capable and enabling control of the iGo neo through MATLAB or Gazebo. The goal was to validate trained AI models in a safe testing environment, particularly during machine-human interaction. At the same time, I supported the development of routing algorithms for the planning tool that schedules tasks and the deployment of autonomous forklifts.
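Micro-Doppler signatures arise because small periodic motions on top of a target's bulk motion modulate its Doppler shift. A common textbook model gives the instantaneous Doppler frequency as f_D(t) = 2·v_r(t)/λ, where the radial velocity toward the radar is v_r(t) = v + A·2πf_m·cos(2πf_m·t) for a sinusoidal micro-motion of amplitude A and rate f_m. For instance, assuming a 77 GHz carrier, a target approaching at 1 m/s yields a bulk shift of about 514 Hz. The sketch below evaluates this model; the carrier and motion parameters are illustrative assumptions, and this is not the data generator built for the pilot.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def micro_doppler_hz(t, carrier_hz, bulk_speed, vib_amplitude, vib_freq):
    """Instantaneous Doppler shift (Hz) of a point scatterer at time t (s).

    Radial velocity toward the radar = bulk speed plus the time derivative of a
    sinusoidal micro-motion vib_amplitude * sin(2*pi*vib_freq*t).
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    phase = 2.0 * math.pi * vib_freq * t
    radial_velocity = bulk_speed + vib_amplitude * 2.0 * math.pi * vib_freq * math.cos(phase)
    return 2.0 * radial_velocity / wavelength


def synthetic_track(duration_s, sample_rate, **params):
    """Sample the micro-Doppler frequency track at a fixed rate."""
    n = int(duration_s * sample_rate)
    return [micro_doppler_hz(i / sample_rate, **params) for i in range(n)]


if __name__ == "__main__":
    track = synthetic_track(0.1, 1000, carrier_hz=77e9, bulk_speed=1.0,
                            vib_amplitude=0.002, vib_freq=20.0)
    print(f"min {min(track):.0f} Hz, max {max(track):.0f} Hz")
```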

Pilot 2 – ADAT3 XF Digital Twin

In the second pilot project, I conducted the requirements analysis and worked closely with the developer to design the software architecture, focusing on the separation between data flow, simulation models, and real-time interfaces. I contributed to the implementation, particularly by addressing issues related to physics calculations and real-time performance, which had created critical bottlenecks during the integration of the digital twin into the existing simulation pipeline. Through targeted optimizations and more efficient algorithms, we improved simulation accuracy and reduced the response times of the control software.

HECOF

Description:

The HECOF project aims to drive systemic change by promoting innovation in higher education teaching and supporting national educational reforms. This will be achieved through the development and testing of an innovative, personalized, and adaptive teaching method that leverages digital data from students' learning activities in immersive environments and applies computational analysis techniques from data science and artificial intelligence.

A central element of the project is the creation of a user-friendly authoring tool that enables instructors to create their own VR learning content without advanced programming skills. The tool will feature a modular structure and support diverse pedagogical scenarios, ranging from exploratory learning materials to interactive simulations. Through intuitive interfaces, templates, and customizable components, it allows educators to adapt flexibly to the needs of their students. Additionally, integrated analytical tools allow for continuous improvement of learning content based on usage and learner data.

Tasks:

As part of the implementation of the authoring tool for VR learning content, I was significantly involved in its conceptual design and technical realization. My responsibilities included conducting a thorough requirements analysis in close collaboration with instructors and students from the National Technical University of Athens (NTUA) and the Politecnico di Milano (POLIMI). The goal was to translate specific pedagogical needs from chemical engineering (NTUA: extraction of compounds from olive tree leaves) and biotechnology (POLIMI: assembly and simulation of a bioreactor) into clear functional software requirements.

Building on this, I provided guidance on suitable development strategies and designed the overall software architecture of the tool. In my role as technical project lead, I managed an interdisciplinary team of developers and 3D artists, coordinated workflows, and ensured both the technical and design implementation of the content.

The technical realization was carried out using state-of-the-art technologies, including Unity, Virtual Reality, Artificial Intelligence (including Large Language Models and adaptive AI), Text-to-Speech (TTS), RESTful APIs, JSON, real-time rendering, data compression, and cryptographic methods. By combining these technologies, we created a powerful yet flexible system capable of delivering immersive and interactive learning content to a high pedagogical and technical standard.

XR4ED

Description:

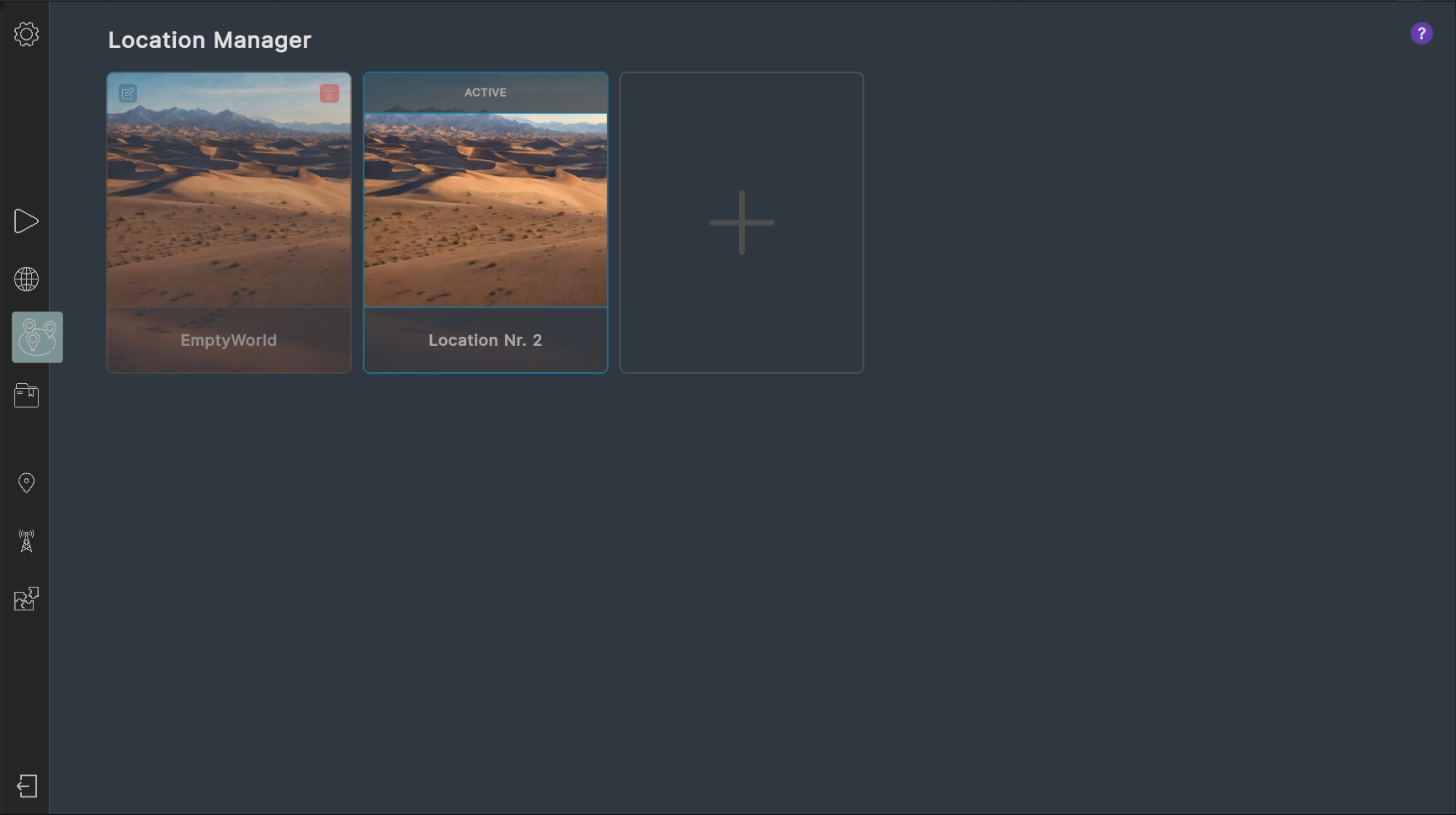

XR4ED is a digital platform centered on two key offerings. The first is an online marketplace where educators, schools, and companies can purchase ready-made XR learning modules, interactive 3D models, instructional videos, practical assignments, and all the tools and resources needed for creating XR content. In parallel, the platform provides a comprehensive authoring tool that enables educational professionals to design, edit, and publish VR learning units without advanced programming skills. The tool offers a drag-and-drop interface, a scene generator, interactive object placement, and script templates that enable the full creation of VR learning materials and support the translation of pedagogical concepts into immersive, experiential learning environments.

Over the course of one year, twenty diverse teams from educational institutions, research groups, and industrial partners actively used the platform. Continuous feedback led to ongoing expansion, adaptation, and improvement of the authoring tool's features, design, and usability. After this testing phase was completed, the results were deployed and used by thousands of students and learners.

Tasks:

I designed the authoring tool myself, from the overall architecture through the UX concept to data management and other key components. Together with my team, I took full responsibility for its implementation, developing a broad range of system components to address diverse user groups and skill levels:

– No-code drag-and-drop solutions for educators who want to create ready-to-use VR learning modules immediately

– Node editor for technically skilled users that enables the addition of simple logic and interactions without writing any code

– Compiler module for building and executing custom scripts, giving developers full flexibility to realize complex pedagogical concepts

In addition to development, my team and I provided ongoing platform support and technical assistance, ensuring that users always received prompt help with questions or issues.

Further technical details can be found in the article on the World Builder, which represents the outcome of this development.

SENSE

Description:

SENSE (Strengthening Cities and Enhancing Neighbourhood Sense of Belonging) builds a Europe-wide network of virtual worlds that replicate real cities in the metaverse. In alignment with the EU Smart Communities initiative, the project leverages the EU data infrastructure and strictly follows interoperability standards to develop practical use cases for the CitiVerse platform. The goal is to connect citizens and municipalities through VR/AR experiences in urban spaces, strengthening not only the technical but above all the social, architectural, green, and cultural dimensions of urban life. This creates an immersive, connecting environment that sustainably enhances the sense of belonging across European cities.

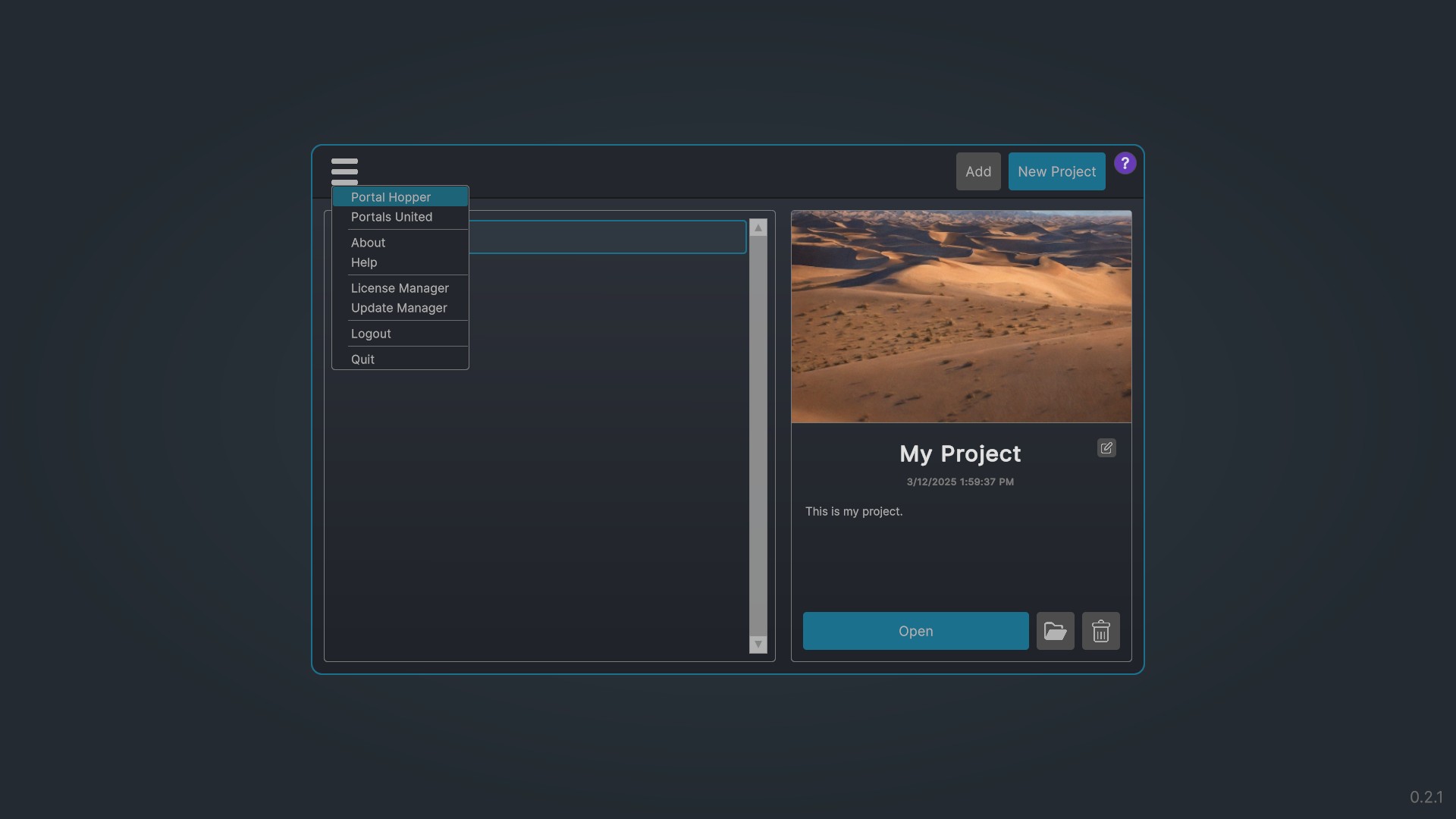

Tasks:

Our team was responsible for the conception and implementation of the VR/AR applications within SENSE. To this end, we developed a specialized data streaming protocol for the Portal Hopper that enables the creation of endless virtual worlds. Through this protocol, landscapes, buildings, and all geolocated data are continuously streamed and rendered in real time. This establishes a scalable foundation that allows users to move seamlessly through vast, dynamically generated environments without extensive pre-downloads, a crucial step toward maximizing immersion and interoperability within CitiVerse.
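A common way to stream an unbounded world is to divide it into fixed-size tiles and keep only the tiles near the user loaded. The sketch below shows that bookkeeping; the tile size, the radius, and the function names are hypothetical, and it is not the actual SENSE/Portal Hopper streaming protocol.

```python
import math


def tiles_in_radius(x, z, tile_size, radius_tiles):
    """Return the (i, j) indices of all tiles within a square radius around (x, z)."""
    ci, cj = math.floor(x / tile_size), math.floor(z / tile_size)
    return {
        (i, j)
        for i in range(ci - radius_tiles, ci + radius_tiles + 1)
        for j in range(cj - radius_tiles, cj + radius_tiles + 1)
    }


def update_streaming(loaded, x, z, tile_size=256.0, radius_tiles=2):
    """After the user moves to (x, z), compute which tiles to request and release.

    Only the difference to the currently loaded set is transferred, so moving
    one tile over streams a single row or column instead of the whole area.
    """
    wanted = tiles_in_radius(x, z, tile_size, radius_tiles)
    to_load = wanted - loaded
    to_unload = loaded - wanted
    return wanted, to_load, to_unload
```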

ReliVR

Description:

ReliVR is an interdisciplinary research project that leverages virtual reality technologies to enhance the treatment of severe depression and complement psychotherapeutic care. As healthcare becomes increasingly digitalized, new therapeutic possibilities emerge that can improve treatment outcomes, reduce waiting times, and address care gaps, particularly in structurally underserved regions.

The project develops two complementary solutions. For the desktop component, a patient web portal was designed, offering comprehensive reference materials, a calendar for appointment scheduling, and a personal journal that enables individuals to independently track and plan their progress. In the VR segment, targeted meditation exercises and interactive roleplaying scenarios were implemented. These roleplays simulate everyday real-life situations, such as a conversation with a supervisor at work or a private setting in which the patient meets a friend in a café. Through immersive exposure to these scenarios, social competencies are strengthened, anxieties are reduced, and coping strategies are trained.

In parallel with module development, the team identifies depression indicators that enable computer-assisted monitoring of treatment progress. This allows early detection of relapses and the timely implementation of countermeasures. Finally, the complete system will be evaluated in a clinical setting to validate its usability, effectiveness, and value for both therapists and patients.

ReliVR thus provides an innovative digital complement to traditional therapies in inpatient, outpatient, and home care contexts, and helps accelerate therapeutic success while effectively preventing relapses.

Tasks:

Our team designed and implemented the entire VR application for the Meta Quest 2 and 3. The system is built on a modular architecture, allowing new therapeutic exercises to be added easily in the future. In addition, we developed a recording system that enables sessions to be replayed and analyzed from a third-person perspective. I personally programmed the underlying architecture and the modular content loading system. At the same time, I was closely involved in partner coordination and consultation and helped shape the scenarios for the therapeutic exercises.
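A session recording of this kind can be modeled as timestamped frames that are interpolated during playback; because the replay reconstructs positions rather than video, it can be viewed from a freely placed third-person camera. The sketch below assumes a hypothetical frame format that holds only a tracked head position, which is a simplification and not the ReliVR recording format.

```python
import bisect
from dataclasses import dataclass, field


@dataclass
class Frame:
    t: float         # seconds since session start
    position: tuple  # (x, y, z) of the tracked head (hypothetical frame content)


@dataclass
class Recording:
    frames: list = field(default_factory=list)

    def record(self, t, position):
        # Frames are assumed to arrive in increasing time order.
        self.frames.append(Frame(t, position))

    def sample(self, t):
        """Linearly interpolate the recorded position at time t, clamped to the ends."""
        times = [f.t for f in self.frames]
        k = bisect.bisect_right(times, t)
        if k == 0:
            return self.frames[0].position
        if k == len(self.frames):
            return self.frames[-1].position
        a, b = self.frames[k - 1], self.frames[k]
        w = (t - a.t) / (b.t - a.t)  # b.t > a.t is guaranteed by bisect_right
        return tuple(pa + w * (pb - pa) for pa, pb in zip(a.position, b.position))
```

Playback can then run at any rate, or be scrubbed back and forth, by calling `sample` with the desired time.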

Portal Hopper

Description:

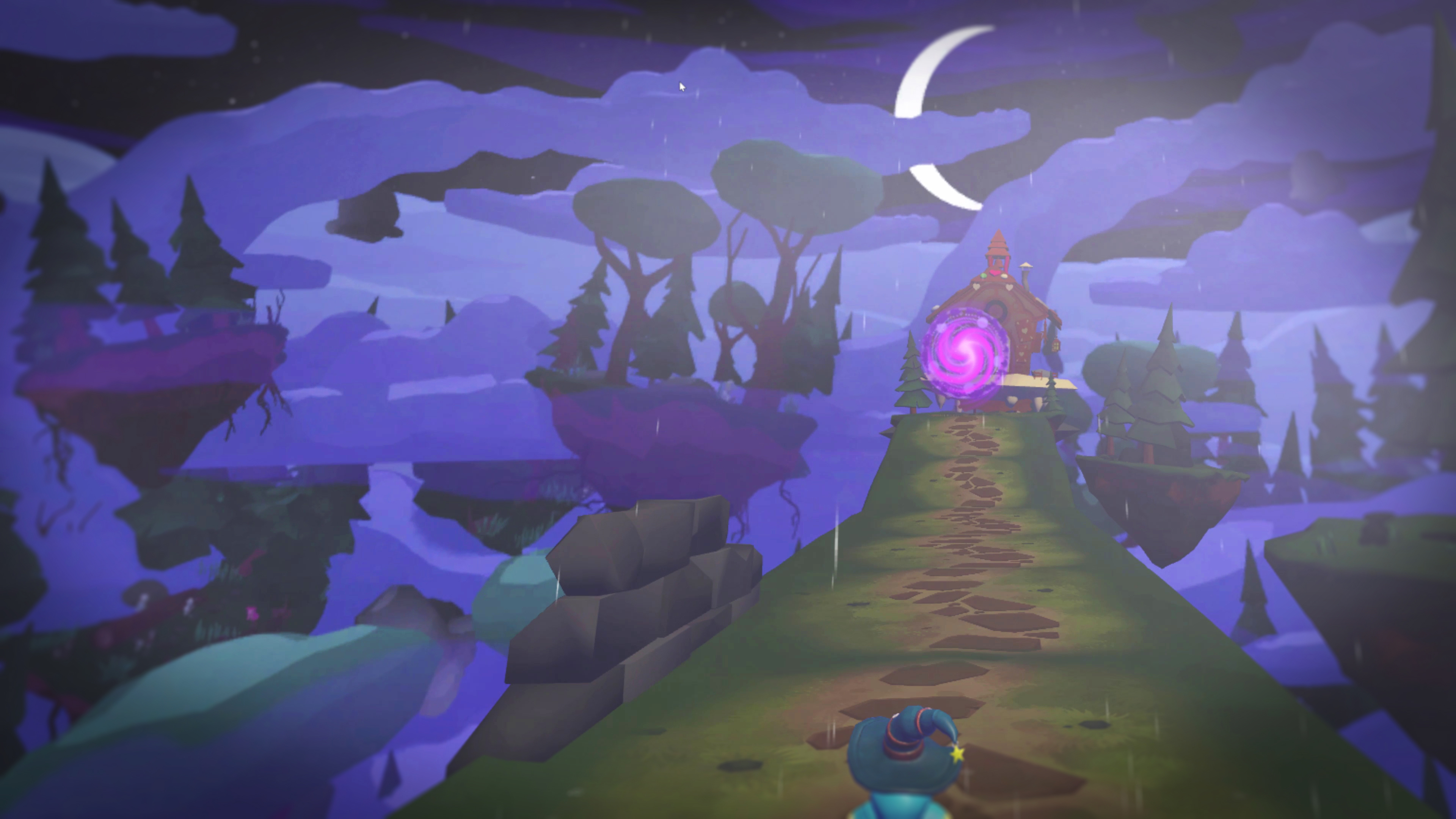

The Portal Hopper is a specialized browser for the three-dimensional metaverse we call "VRWeb". It operates on a simple protocol that connects all worlds and locations within a multiverse. A Hopper must be able to interpret VRML files that specify which Hopper should be used and how to reach a particular world; it can then either load the target world directly or launch another Hopper designed to handle it. Additionally, it supports executing installers for other Hopper-aware applications, enabling games built on entirely different physics or graphics engines to be seamlessly integrated.

Like a conventional web browser, the Portal Hopper enables users to jump between virtual worlds almost as easily as clicking hyperlinks. Any address can be typed in, but the most engaging exploration comes through discovery. By tapping or touching a portal, such as a door, a corridor, or a statue, a user transitions into another world or “domain”. Portals can vary widely in design and do not need to look identical, giving developers full creative freedom.

When moving between locations within a single world, the Hopper retains data such as game states or player names, but it clears all information when jumping to an entirely new world in order to conserve memory. This ensures a consistent user experience while remaining resource-efficient.
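The hop logic described in the preceding paragraphs (load a world directly or hand it off to another Hopper, keep state within a world, clear it across worlds) can be sketched as follows. The descriptor fields, Hopper IDs, and class names are hypothetical, and plain dataclasses stand in for the VRML files the real Portal Hopper reads.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WorldDescriptor:
    """Hypothetical stand-in for what a Hopper reads from a world file."""
    world_id: str
    location: str
    hopper_id: str  # which Hopper is able to run this world


@dataclass
class Hopper:
    hopper_id: str
    current_world: Optional[str] = None
    session_state: dict = field(default_factory=dict)

    def hop(self, target: WorldDescriptor):
        """Decide how to handle a jump to `target`; returns an (action, detail) pair."""
        if target.hopper_id != self.hopper_id:
            # Another Hopper must run this world: hand over the descriptor.
            # (This sketch leaves local state untouched when delegating.)
            return ("delegate", target.hopper_id)
        if target.world_id != self.current_world:
            # Entering a different world: clear all state to conserve memory.
            self.session_state.clear()
            self.current_world = target.world_id
        # Moving within the same world keeps state such as game progress or player name.
        return ("load", f"{target.world_id}/{target.location}")
```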

To maximize inclusion, the Portal Hopper can smoothly switch between VR view and first-person or third-person perspective. Content only needs to be built once using the provided components, so that the differing interaction paradigms blend seamlessly when switching modes.

In short, the Portal Hopper is the central browser of VRWeb. It presents the metaverse as a unified network of interconnected worlds and places, much like the World Wide Web does for the 2D internet, but with the added ability to wander and interact, with security, payment, and authentication handled through a unified blockchain infrastructure, all within an immersive three-dimensional environment.

Tasks:

Together with a colleague, I developed the entire Portal Hopper, including all system components and module interfaces as well as the OpenXR integration, primarily using a pair programming approach. We designed the complete architecture, defined the underlying data structures, and implemented an initial UX concept, ensuring that the Hopper functions efficiently, modularly, and intuitively from the ground up.

World Builder

Description:

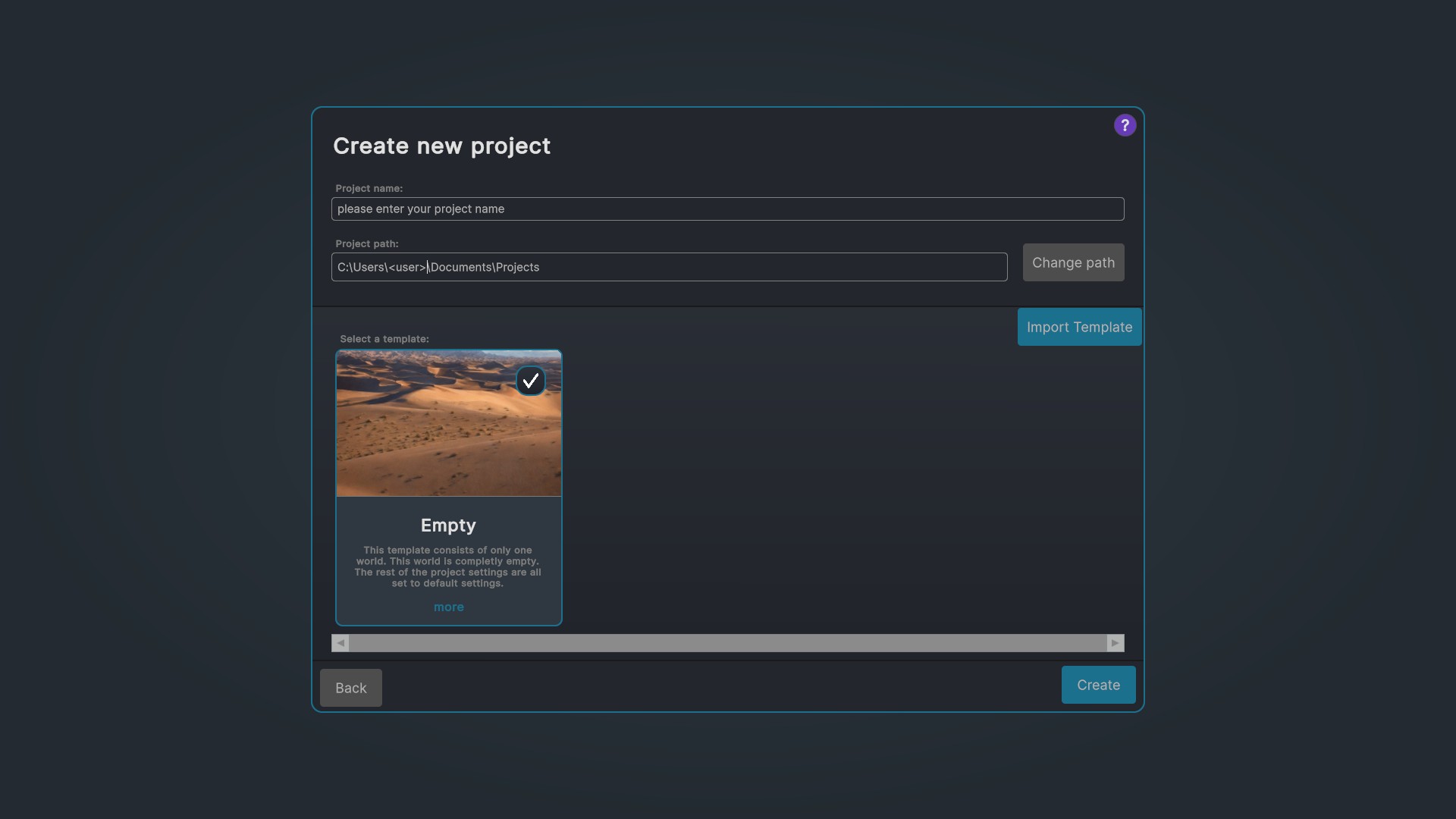

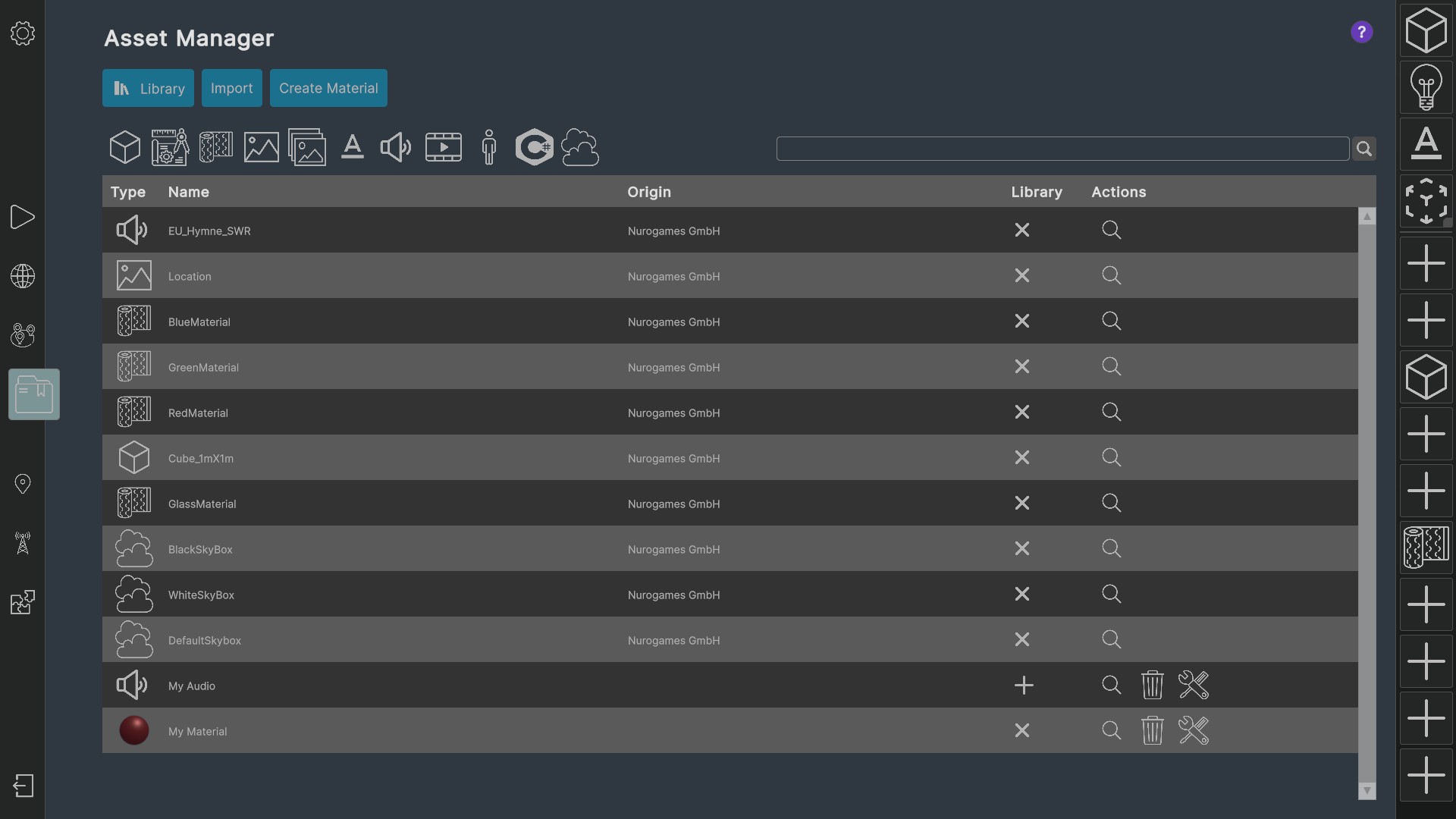

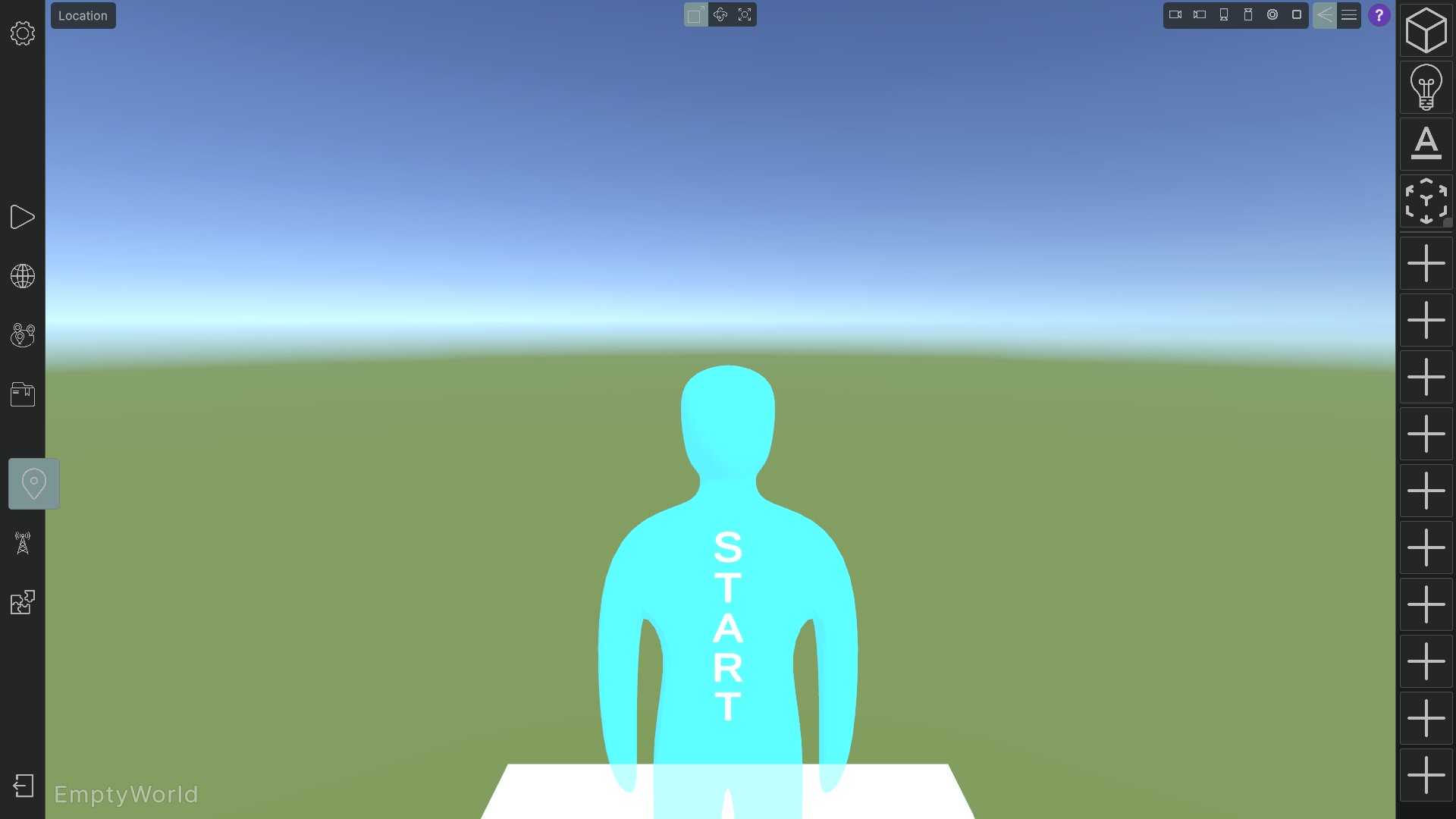

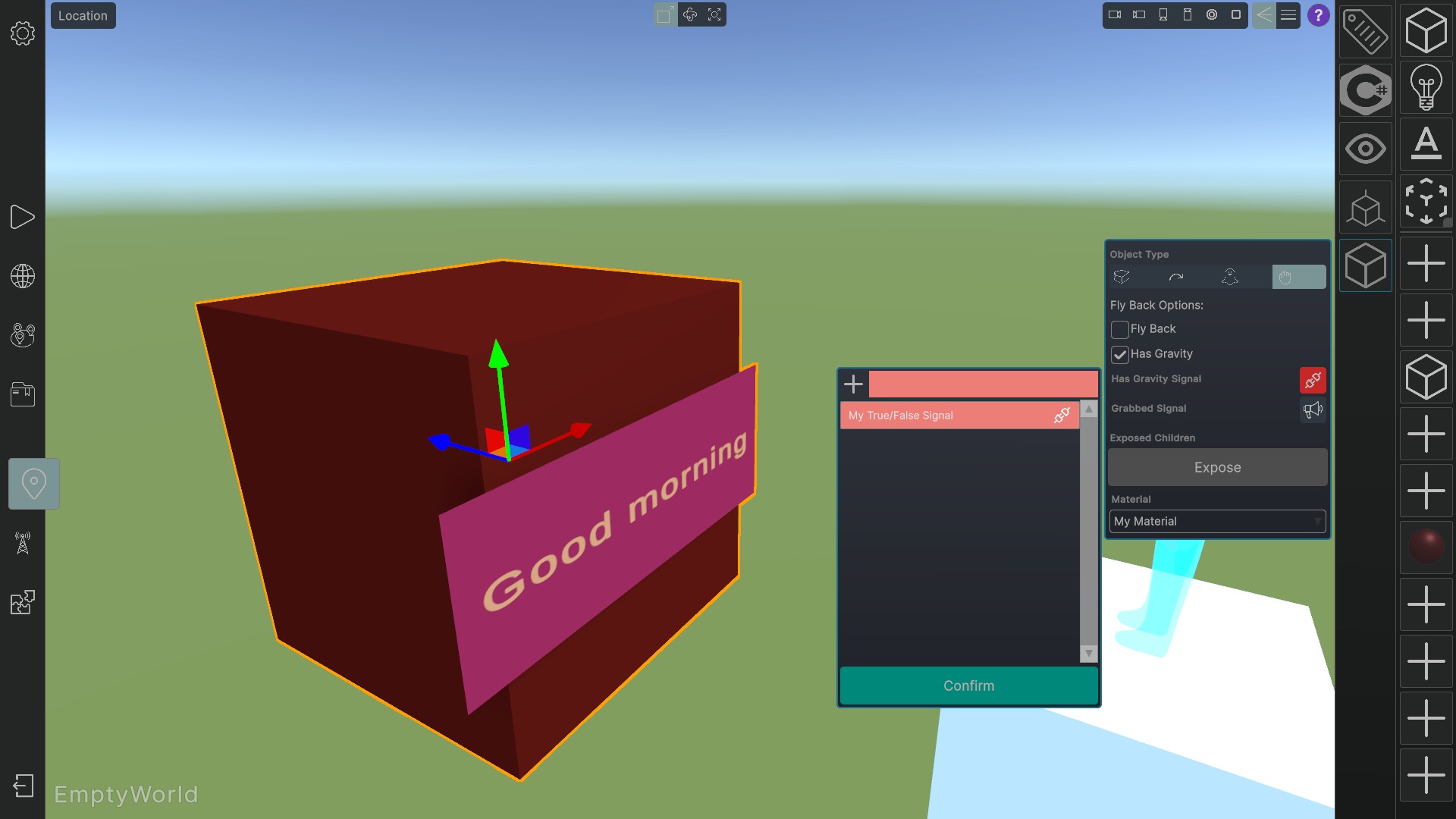

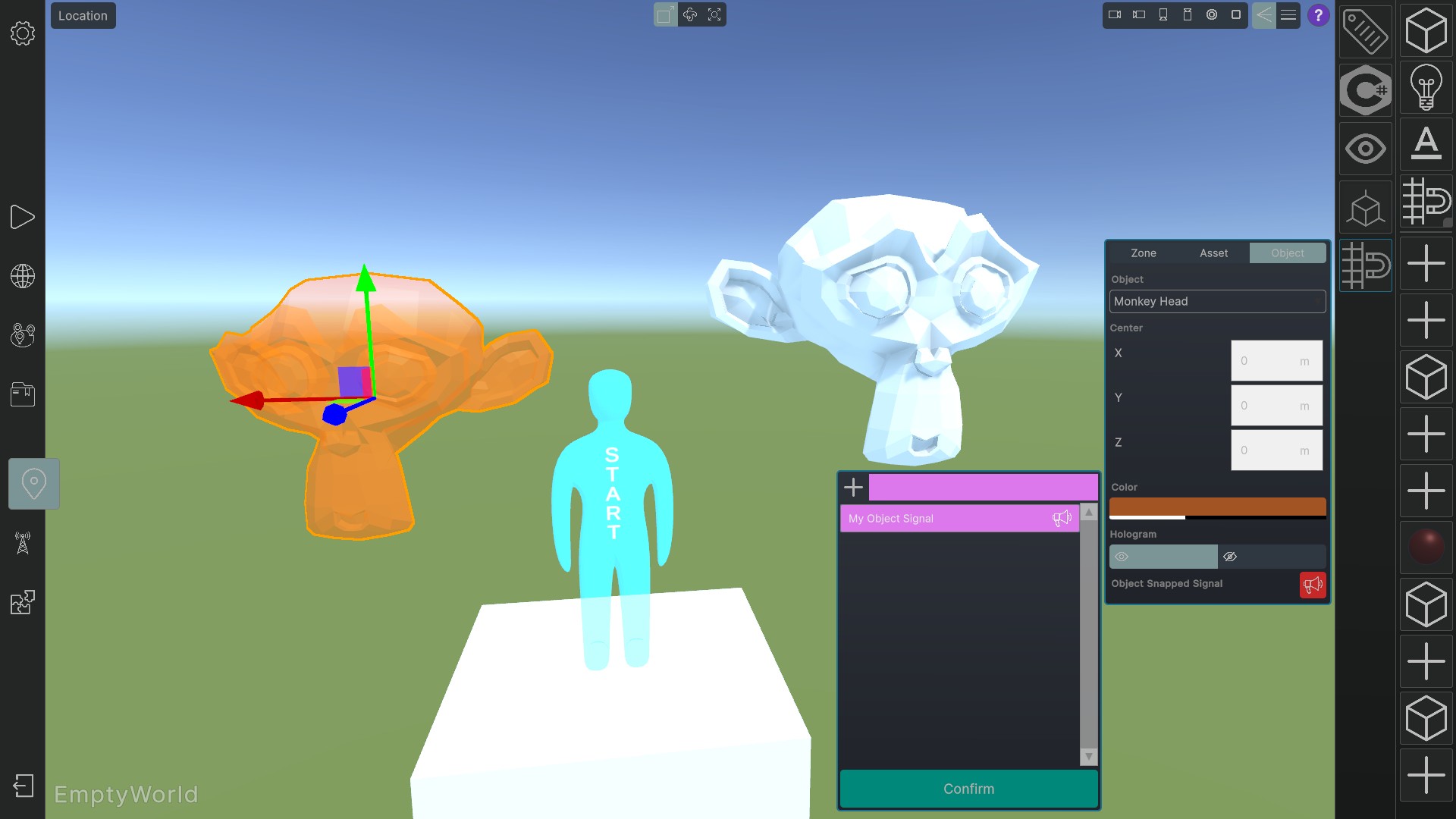

The software development project "World Builder" is a state-of-the-art WYSIWYG authoring tool specifically designed for creating interactive virtual reality environments that can later be played back in the accompanying Portal Hopper application. In numerous projects, the World Builder has already demonstrated not only technical maturity but also exceptional adaptability. To date, more than two hundred developers have used the tool, and several thousand end users have experienced the resulting immersive experiences and learning modules.

A core feature of the World Builder is its white-label approach. Through a flexible plugin system, the application can be rapidly customized for specific projects: a plugin can completely redefine the look and feel of the authoring interface, extend functionality, or integrate additional services. This allows the solution to be seamlessly embedded into any existing product line without changes to its fundamental structures. In addition, the system provides predefined templates for projects, locations, and logic to simplify onboarding and ensure design consistency.
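A white-label plugin system of this kind can be reduced to a host that lets plugins override UI theme values and contribute extra commands. The hook names (`apply_theme`, `commands`), the `PluginHost` class, and the example plugin are hypothetical and only illustrate the pattern; the sketch is written in Python purely for illustration and does not reflect the World Builder's actual plugin API.

```python
from typing import Callable, Dict, List, Protocol


class Plugin(Protocol):
    name: str

    def apply_theme(self, theme: Dict[str, str]) -> Dict[str, str]: ...
    def commands(self) -> Dict[str, Callable[[], str]]: ...


class PluginHost:
    def __init__(self, base_theme: Dict[str, str]):
        self.base_theme = dict(base_theme)
        self.plugins: List[Plugin] = []

    def register(self, plugin: Plugin) -> None:
        self.plugins.append(plugin)

    def theme(self) -> Dict[str, str]:
        """Apply each plugin's theme overrides in registration order."""
        theme = dict(self.base_theme)
        for plugin in self.plugins:
            theme = plugin.apply_theme(theme)
        return theme

    def all_commands(self) -> Dict[str, Callable[[], str]]:
        """Merge commands from all plugins; later plugins win on name clashes."""
        merged: Dict[str, Callable[[], str]] = {}
        for plugin in self.plugins:
            merged.update(plugin.commands())
        return merged


class CustomerBranding:
    """Hypothetical plugin that re-skins the editor and adds one command."""
    name = "customer-branding"

    def apply_theme(self, theme):
        return {**theme, "accent": "#0055AA", "logo": "customer_logo.png"}

    def commands(self):
        return {"export_to_portal": lambda: "exported"}
```

Because the base theme is copied rather than modified, removing a plugin restores the original look without any change to the host.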

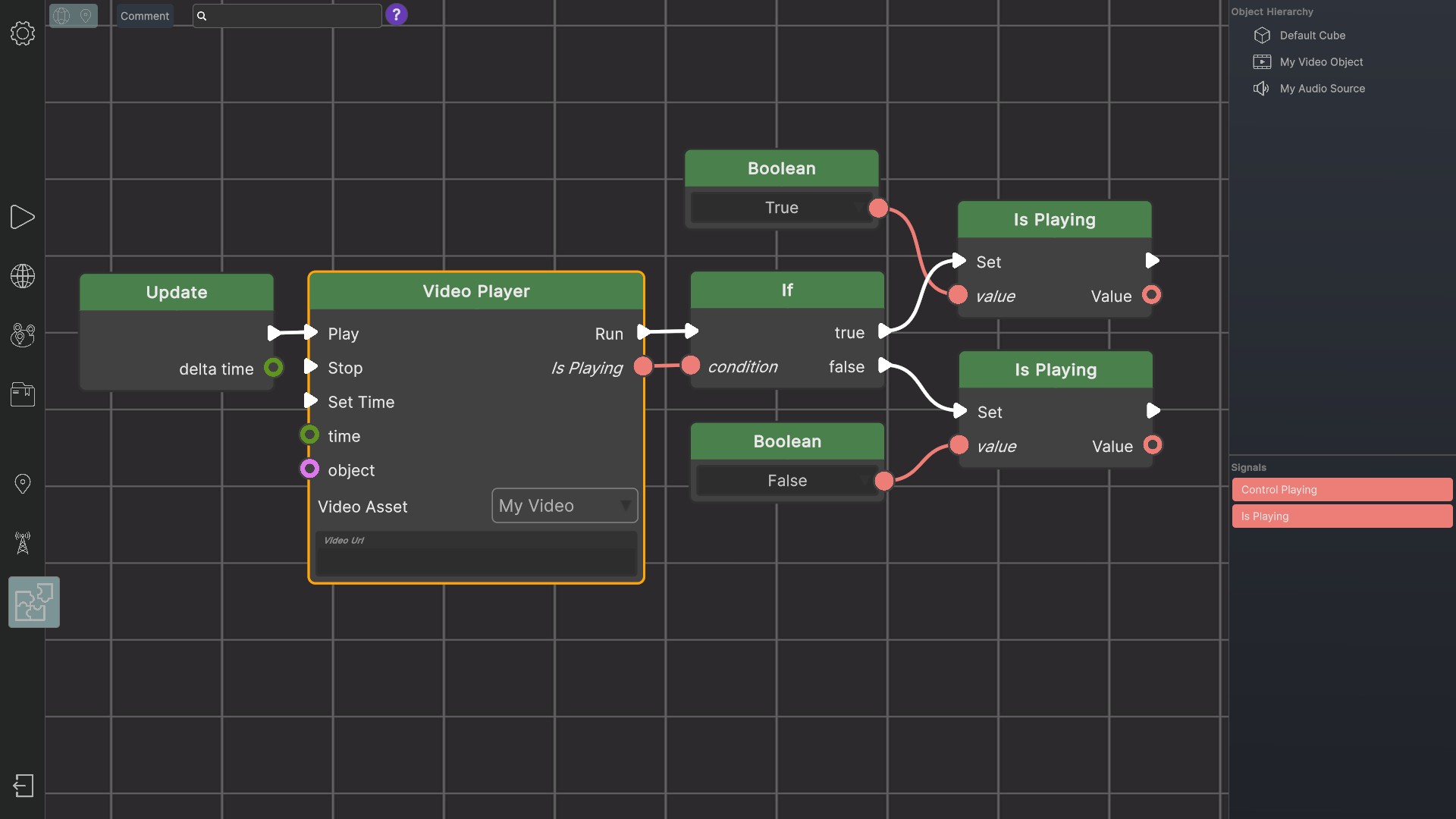

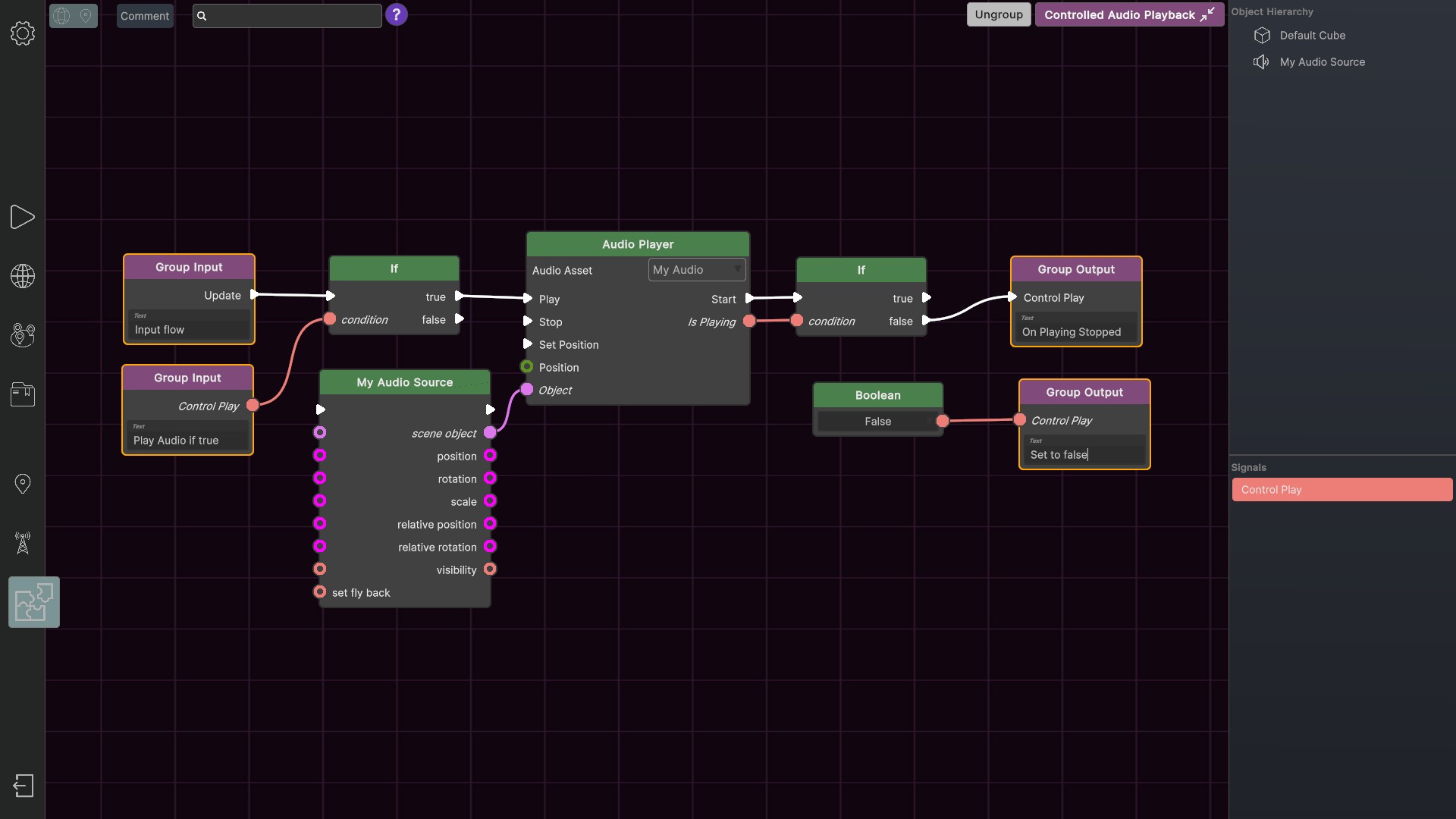

Specific features have been implemented for each of the three primary user groups: beginners, advanced power users, and developers. Beginners benefit from guided wizards that walk them step by step through content creation, and from a direct linking system that simplifies connections between objects. Power users gain access to a node-based logic editor that visualizes complex interactions and allows them to be edited without direct code input. For developers, an integrated C# compiler provides full control over logic and interactivity through custom scripting. All user groups can work with standard image, 3D model, video, and audio formats, while certain features are toggled on or off depending on the target audience.

Since conventional scene description formats do not allow seamless integration of custom code, a proprietary Scene Description File format was developed. This format enables the definition of all elements in a VR scene, including custom logic, and is designed to be easily extensible.
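Node-based logic editors typically store interactions as a directed acyclic graph and evaluate the nodes in topological order, so that every input is ready before it is used. The sketch uses the standard library's `graphlib` (Python 3.9+); the node functions and the `evaluate` helper are hypothetical and do not reflect the World Builder's internal node model.

```python
from graphlib import TopologicalSorter


def evaluate(nodes, edges):
    """Evaluate a node graph.

    nodes: {node_id: function taking the list of input values}
    edges: {node_id: [ids of nodes feeding into it]} (list order = argument order)
    Raises graphlib.CycleError if the graph contains a cycle.
    """
    sorter = TopologicalSorter()
    for node_id in nodes:
        sorter.add(node_id, *edges.get(node_id, []))
    values = {}
    for node_id in sorter.static_order():
        inputs = [values[src] for src in edges.get(node_id, [])]
        values[node_id] = nodes[node_id](inputs)
    return values


if __name__ == "__main__":
    # Hypothetical interaction: open a door when a button is pressed and the door is unlocked.
    graph_nodes = {
        "pressed": lambda _: True,
        "unlocked": lambda _: True,
        "both": lambda xs: all(xs),
        "open_door": lambda xs: "open" if xs[0] else "closed",
    }
    graph_edges = {"both": ["pressed", "unlocked"], "open_door": ["both"]}
    print(evaluate(graph_nodes, graph_edges)["open_door"])
```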
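The actual Scene Description File format is proprietary and not documented here. Purely to illustrate the idea of a scene format that carries custom logic alongside ordinary elements and tolerates extensions, the sketch below round-trips a hypothetical JSON structure in which objects reference scripts and unknown fields are preserved rather than discarded. All field names are assumptions.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

KNOWN_FIELDS = {"id", "asset", "position", "script"}


@dataclass
class SceneObject:
    id: str
    asset: str                    # e.g. a 3D model, image, video, or audio file
    position: List[float]
    script: Optional[str] = None  # reference to custom logic (hypothetical field)
    extensions: Dict[str, Any] = field(default_factory=dict)  # unknown/future fields


def load_scene(text: str) -> List[SceneObject]:
    objects = []
    for raw in json.loads(text)["objects"]:
        extras = {k: v for k, v in raw.items() if k not in KNOWN_FIELDS}
        objects.append(SceneObject(raw["id"], raw["asset"], raw["position"],
                                   raw.get("script"), extras))
    return objects


def dump_scene(objects: List[SceneObject]) -> str:
    out = []
    for obj in objects:
        raw = {"id": obj.id, "asset": obj.asset, "position": obj.position}
        if obj.script is not None:
            raw["script"] = obj.script
        raw.update(obj.extensions)  # write unknown fields back unchanged
        out.append(raw)
    return json.dumps({"objects": out}, indent=2)
```

Preserving unrecognized fields is what lets older tools open and save files written by newer, extended versions without losing data.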

Another milestone is the integration of generative AI into the World Builder via plugins. Through standardized interfaces, additional AI services can be incorporated for all asset types with little effort. Initial implementations of voice control and tool calling have already been completed, laying the foundation for future voice-driven workflows. Simultaneously, an AI-powered documentation system is being developed to assist users with questions and reduce the demand for technical support. This AI solution leverages existing asset data and project information to provide context-sensitive help and proactively explain error messages.

Overall, the World Builder delivers a fully integrated, modular platform that spans the entire journey from idea to finished VR environment. By combining a WYSIWYG editor, a plugin-based architecture, user-group-specific functions, and cutting-edge AI support, the project sets new standards in the authoring of interactive VR content. It empowers developers, designers, and non-programmers alike to create high-quality virtual worlds that integrate seamlessly into the Portal Hopper app while remaining extensible for future AI technologies.

Tasks:

In this project, I took full ownership of the World Builder's software architecture, planning and implementing all layers consistently from the ground up, from application logic to the persistence layer. At the same time, I led the UI/UX design, shaping the entire interface to be intuitive, visually appealing, and modularly extensible. In close collaboration with my colleagues, I developed the core systems, including the plugin framework, the C# compiler, the node-based logic editor, and the generative AI integration, ensuring that critical knowledge was distributed across the team rather than siloed in a single individual. Additionally, I structured the technical support system and introduced a help desk concept that combines proactive and reactive assistance. My responsibilities extended to the commercialization of the product, where I defined and refined the market strategy. Finally, I played a key role in drafting project proposals and actively participated in tender processes for new initiatives, ensuring the continued expansion of the product portfolio and the targeted evolution of the World Builder.

CALLISTO

Description:

Artificial Intelligence (AI) is increasingly being used in the space sector to generate value-enhancing Earth observation products from heterogeneous georeferenced data collected by satellites and other sources. Within the CALLISTO project, these data are combined using high-performance computing with geolocated UAV observations, web and social media content, and on-site sensors. AI methods that can also run at the edge extract semantic knowledge, concepts, changes, activities, 3D models, and videos, and deliver analysis results to end users through non-traditional interfaces such as augmented, virtual, and mixed reality. Upon request, data fused from multiple sources is provided to enable new VR/AR applications for water authorities, journalists, EU agricultural policy makers, and security agencies. Subtasks of the project include monitoring agricultural fields for EU subsidies, measuring air quality at varying altitudes, analyzing water quality in rivers and lakes, and detecting changes along EU borders for enhanced border security.

Tasks:

We developed smartphone apps for end users that overlay the collected data using augmented reality. I designed the software architecture, implemented an intuitive user interface and user experience (UI/UX), and created visualizations for certain datasets.
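To overlay georeferenced data in AR, each data point's latitude and longitude must be converted into a metric offset from the user's position. Over the short distances typical of on-site AR, an equirectangular (local flat-earth) approximation is usually sufficient. The helper below is a minimal sketch of that conversion; it is an assumption about how such an overlay could work, not the CALLISTO app's code, and it ignores altitude and device heading.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def geo_to_local(user_lat, user_lon, point_lat, point_lon):
    """Approximate (east, north) offset in meters from the user to a data point.

    Uses an equirectangular approximation, which is adequate for distances
    of up to a few kilometers away from the poles.
    """
    d_lat = math.radians(point_lat - user_lat)
    d_lon = math.radians(point_lon - user_lon)
    mean_lat = math.radians((user_lat + point_lat) / 2.0)
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

The resulting offsets can be placed directly in an AR scene whose origin is the user's position and whose axes are aligned with east and north.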

i-Game

Description:

The i-Game project is implemented under the Horizon Europe program with the goal of creating an open, user-friendly platform where developers, designers, end users, and professionals from the cultural and creative sectors can collaboratively develop open-source games. The focus lies on leveraging video games as a cultural and economic phenomenon to drive innovation while generating positive impacts on social cohesion, sustainability, and economic growth.

The platform provides a network that brings together experts from diverse backgrounds, from game designers and developers to end users, and offers modern tools for co-creating games for mobile devices and virtual reality environments, encouraging active participation from all stakeholders. Furthermore, it establishes an ethical design culture to ensure responsible gameplay. The platform continuously monitors and evaluates the impact of video games on culture (for example, museums), the creative industries, and the fashion and textile sectors, analyzing how online games positively shape well-being, culture, and society. These insights enable the development of new generations of games that enhance individual well-being.

The underlying community-oriented approach allows fresh ideas, collaborations, and business models to emerge from a diverse pool of participants, with particular emphasis on artificial intelligence (AI). In addition to providing digital tools, the platform supports the definition, design, and implementation of relationships, collaborations, and game experiences. The consortium, composed of organizations with varied backgrounds and specialized expertise, ensures an interdisciplinary approach that comprehensively addresses the complex effects of games on innovation, sustainability, social cohesion, and economic growth.

Tasks:

As a developer advisor in the i-Game project, I contributed my expertise as a game developer, together with the needs of the developer stakeholder group, to shape the platform strategy. I also participated in drafting policy and regulatory recommendations focused specifically on the use of artificial intelligence in game development and creative processes. In close collaboration with legal experts, I addressed key questions surrounding copyright, licensing, and ethical standards from a developer's perspective while illustrating current technological capabilities, enabling the project to formulate actionable legislative proposals for the European Commission. Concurrently, I integrated AI functionalities into the World Builder to lower the entry barrier for creating VR experiences in museums and other cultural institutions, providing platform users with a powerful yet accessible AI-powered toolkit.

ASHVIN

Description:

MISTAL (Mitigation of Environmental Impacts on Subclinical and Long-Term Health Outcomes) is an interdisciplinary research project that develops a dynamic, privacy-preserving Health Impact Assessment (HIA) toolkit to predict how environmental factors will affect future disability and population health outcomes. By integrating environmental, socioeconomic, geographic, and clinical data with lifestyle and individual health parameters drawn from large-scale digital population surveys, the toolkit employs a federated learning framework that enables seamless integration of heterogeneous data sources without centrally storing any personally identifiable information. Models are trained and validated using real industrial exposure data from steel plants in Taranto (southern Italy), Rybnik (Poland), and Flanders (Belgium), systematically analyzing the effects of air pollutants such as particulate matter, nitrogen oxides, and sulfur dioxide on subclinical health indicators. The outcome is a validated, user-friendly HIA toolkit that empowers policy agencies, environmental organizations, healthcare providers, insurance companies, and patient advocacy groups to detect long-term health consequences of environmental exposures early and to design targeted intervention strategies that reduce the global disease burden and sustainably improve quality of life in affected regions.
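Federated learning avoids centralizing personal data by training models locally at each data holder and sharing only model parameters. The classic aggregation step, Federated Averaging (FedAvg), weights each site's parameters by its number of training samples. The sketch shows only that aggregation step, with plain lists as parameter vectors; whether MISTAL uses FedAvg or a different aggregation rule is not stated here, and the site names are illustrative.

```python
from typing import Dict, List, Tuple


def fed_avg(updates: Dict[str, Tuple[List[float], int]]) -> List[float]:
    """Federated Averaging: sample-weighted mean of locally trained parameter vectors.

    updates maps a site name to (parameters, number of local training samples).
    Only parameters and sample counts are shared, never the raw records.
    """
    total = sum(n for _, n in updates.values())
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(next(iter(updates.values()))[0])
    global_params = [0.0] * dim
    for params, n in updates.values():
        if len(params) != dim:
            raise ValueError("parameter vectors must have equal length")
        for i, p in enumerate(params):
            global_params[i] += p * n / total
    return global_params


if __name__ == "__main__":
    print(fed_avg({"site_a": ([1.0, 2.0], 100), "site_b": ([3.0, 4.0], 300)}))
```

In a full training loop, the averaged parameters are sent back to every site as the starting point for the next local training round.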

Tasks:

So far, I have been involved in the MISTAL project solely as a consultant, since the specific implementation tasks are not yet due. My role focuses on visualizing project data for the general public. In this area, I have contributed my expertise, collaborated closely with partners to define meaningful data formats, and developed several proposals for engaging and comprehensible visualization approaches that ensure both scientific accuracy and accessibility for non-expert audiences.

MindSpaces

Description:

MindSpaces: Adaptive and Inclusive Spaces for the Future

MindSpaces is an interdisciplinary EU project that connects artists, technologists, and architects to establish a new way of working aimed at developing innovative, emotional, and functional spatial concepts for cities, workplaces, and living environments. The goal is to create adaptive “MindSpaces” that respond in real time to the emotional, aesthetic, and societal reactions of users, thereby enhancing usability, efficiency, and emotional well-being. Project partners leverage cutting-edge multisensory technologies such as wearable EEG, heart rate monitoring, galvanic skin response, environmental sensing, and social media inputs to capture immediate feedback from VR installations and modify artistic elements in real time.

The project operates through an open call system to engage artists from around the world and provide them with residency opportunities. In Barcelona, the partner cluster collaborates with the Espronceda organization to attract local artists from the L’Hospitalet region and integrate their creative ideas into digital designs. Renowned partners include the digital artists Maurice Benayoun and Refik Anadol, as well as Tobias Klein, Jean-Baptiste Barrière, and the Brain Factory Brain Cloud project team, who contribute their expertise in public art installations and advanced technological projects.

Concepts and designs are initially developed as 3D models, jointly refined by architects and artists. These models serve as the foundation for VR environments that respond dynamically to real-time EEG and physiological data from users. Through adaptive algorithms, design parameters such as lighting, acoustics, and spatial layout are continuously adjusted, allowing the spaces to address the emotional needs of individuals immediately. This approach enables rapid validation of AI-driven design solutions within controlled yet realistic scenarios, bridging artistic vision and evidence-based spatial design.
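A basic building block for spaces that react to physiological signals is to smooth the noisy sensor stream and map it to a design parameter. The sketch below applies an exponential moving average to a normalized arousal value (for example, one derived from skin conductance) and maps it to a lighting intensity. The signal, the mapping direction, and all constants are hypothetical assumptions, not the MindSpaces algorithms.

```python
class AdaptiveLight:
    """Map a smoothed arousal signal in [0, 1] to a lighting intensity in lux."""

    def __init__(self, alpha=0.2, calm_lux=300.0, bright_lux=800.0):
        self.alpha = alpha            # smoothing factor of the moving average
        self.calm_lux = calm_lux      # intensity used at maximum arousal
        self.bright_lux = bright_lux  # intensity used at minimum arousal
        self.level = None             # smoothed arousal, None until the first sample

    def update(self, arousal):
        arousal = min(max(arousal, 0.0), 1.0)  # clamp sensor noise into range
        if self.level is None:
            self.level = arousal
        else:
            self.level = self.alpha * arousal + (1 - self.alpha) * self.level
        # Hypothetical policy: dim the light as smoothed arousal rises.
        return self.bright_lux + (self.calm_lux - self.bright_lux) * self.level
```

The smoothing keeps single noisy readings from causing visible flicker, while sustained changes still shift the lighting within a few samples.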

Tasks:

I independently developed the applications for both use cases.

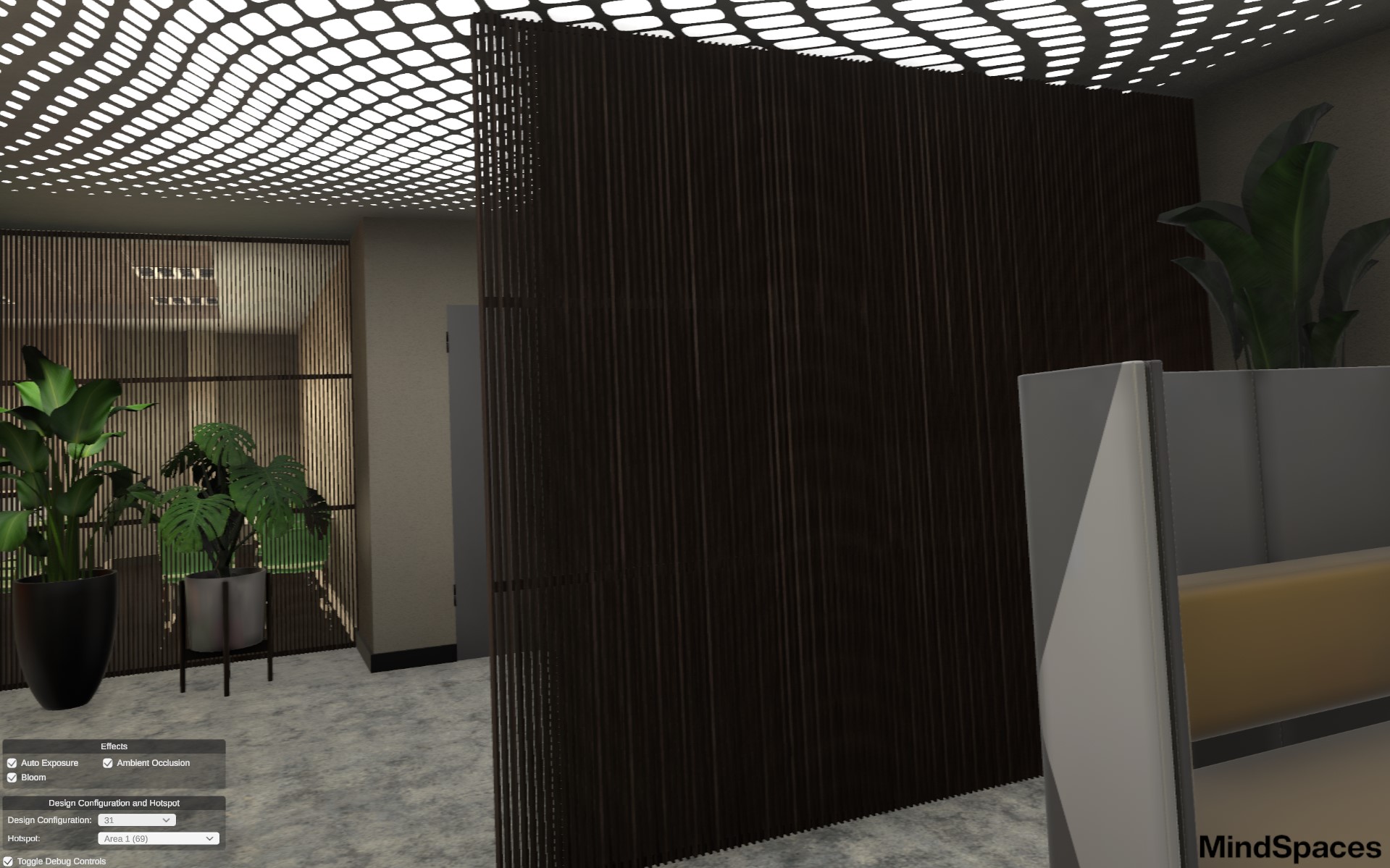

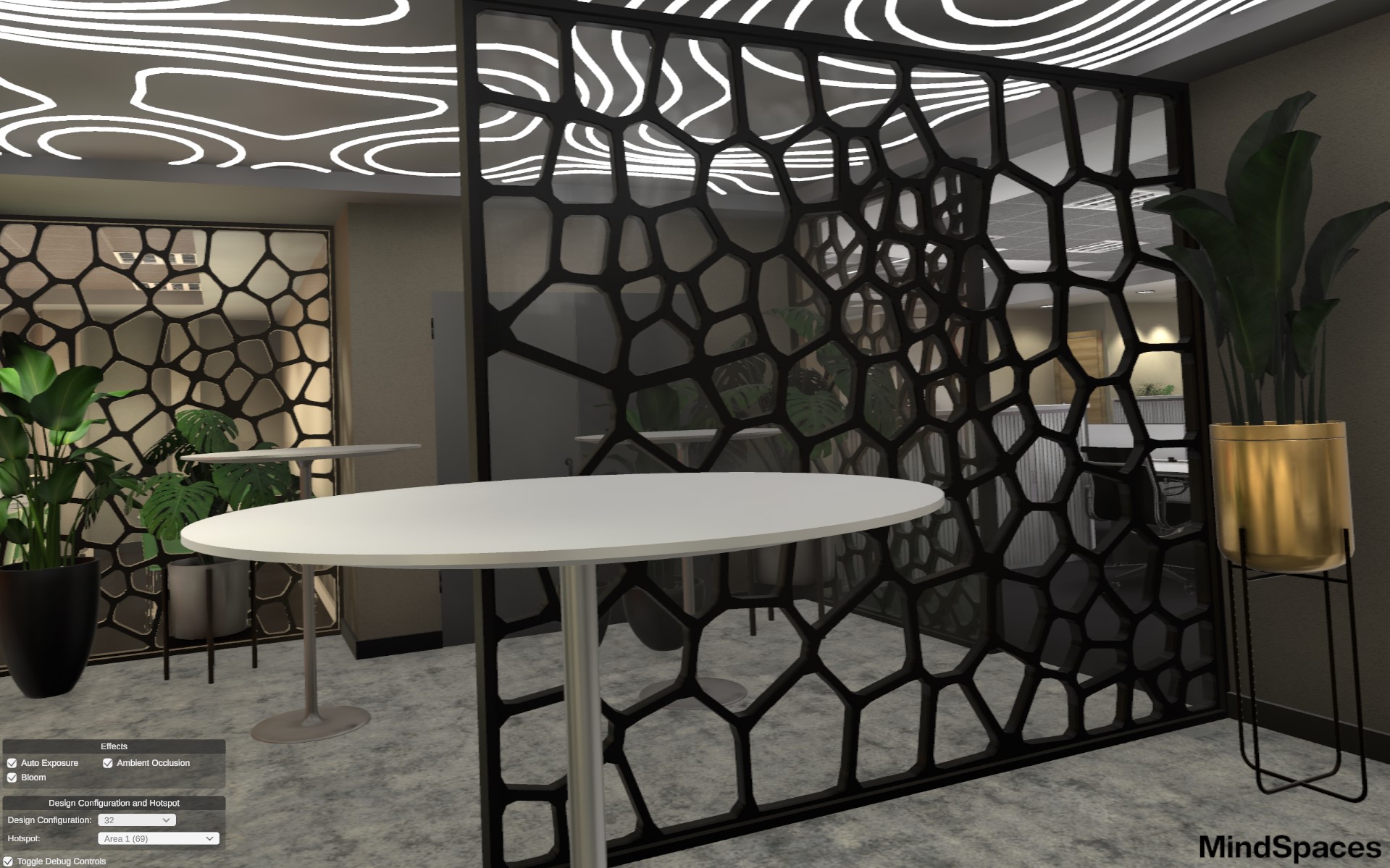

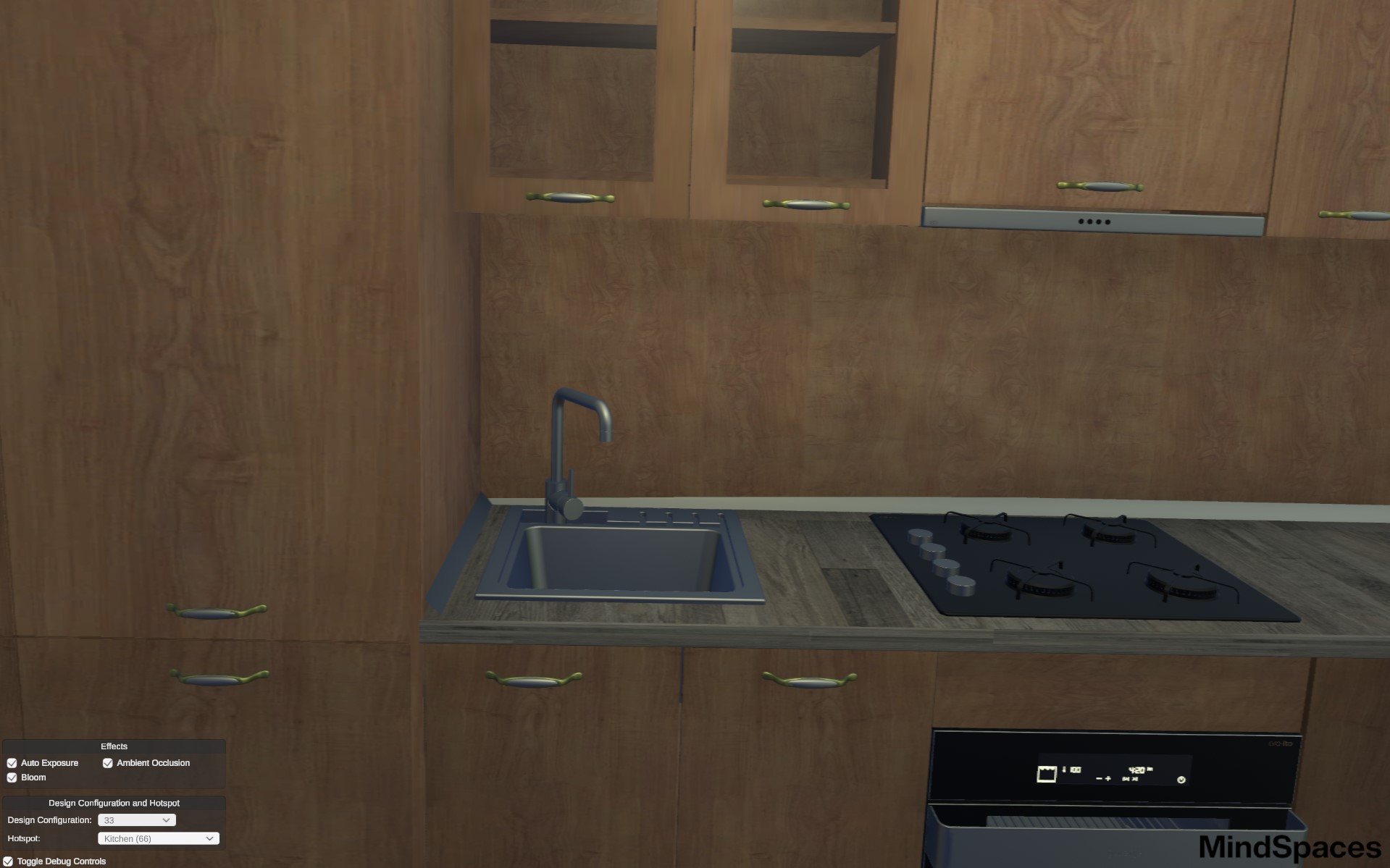

Use Case 1: Experimental Study on Office Layouts (Workplaces.ai)

For the experimental investigation of workspaces, I created the full application, which enables participants to explore various office layouts, select their preferred design, and evaluate each environment against multiple criteria such as job satisfaction, cognitive load, aesthetic impression, and efficiency of movement paths. During testing, medical biomarkers (such as heart rate and skin conductance), motion data (including walking routes and durations), and visual measurements (such as gaze fixation points and objects in the field of view) were recorded simultaneously. These multidimensional datasets are used to identify optimal and highly adaptable work environments, with a particular focus on short movement distances, a clear spatial layout, and overall comfort. Through quantitative analysis of these metrics, the most effective room concepts can be identified before any physical renovation takes place.

Use Case 2

In Use Case 2, I developed the interactive visualizations for interior and campus designs, as well as the multi-user environment on a fictional Greek island, ensuring that all applications were fully functional, user-friendly, and optimized for their respective test groups (seniors and architecture students).

Skills & Technologies:

WELCOME

Description:

The project developed a mobile application in which migrants could create their own avatar, which was then used within an immersive virtual reality environment. In this VR setting, several mini-games were available: a geography puzzle that introduced new residents to the most important locations and regions of their host country in an engaging way; a vocabulary puzzle that taught essential words and expressions; and a role-play simulation focused on job applications, in which users gained practical insight into required documents and typical questions posed by employers. By combining a personalized avatar, interactive VR, and linguistically and culturally adapted learning content, the project offered a hands-on and motivating approach to supporting migrants in registration, orientation, language acquisition, integration, and social participation. At the same time, decision makers in public administrations were provided with data-driven analytics and decision support tools to effectively guide and improve the integration process.

Tasks:

I took over the project from my predecessor as team lead. In this role, I supervised the developers and artists, monitored progress, and addressed emerging issues such as performance challenges or UX questions. As in all my projects, I was responsible for the final quality assurance and delivered the application.

Skills & Technologies:

BUNDESWEHR

Description:

As part of this project, a first, simple demonstration prototype of a VR simulation system was conceived and developed to enable civilian emergency responders, police officers, and military units to prepare for terrorist attacks and emergency scenarios in a realistic setting. The simulation supports situational assessment, rapid triage, and operational response under high-stress conditions, allowing personnel to train their decision-making and coordination in authentic environments.

Tasks:

In this project, I served as the sole developer and worked alongside an artist to implement the VR simulation system in Unreal Engine within approximately two days. All core functionalities, including real-time situational assessment, triage mechanics, and the rendering of dynamic emergency scenarios, were rapidly developed and tailored to the specific needs of emergency responders, police forces, and military units.

Skills & Technologies:

FANTASY FITNESS FIGHTS

Description:

Fantasy Fitness Fights VR is a roguelite VR exergame that transports players into a magical world full of adventure, where they battle enemies using dynamic hand and body movements. The fully gesture-based control system allows players to defend themselves, wield weapons, and cast spells; every hit or defensive maneuver requires a physical motion that simultaneously promotes fitness and coordination. The game aims to proactively combat sedentary behavior and to motivate gamers of all skill levels, from casual players to hardcore enthusiasts, to stay fit and healthy by overcoming physically demanding challenges in an engaging way. Through action-packed gameplay, diverse enemies, and a progressive reward system, Fantasy Fitness Fights VR uniquely combines fun, adventure, and physical activity into an immersive VR experience.

Tasks:

I was significantly involved in prototyping the project, with a strong focus on motion recognition. During the early development phases, we evaluated multiple approaches. In addition to traditional acceleration pattern recognition (feature-based classifiers using gyroscope and accelerometer data), we also implemented modern machine learning methods, ranging from simple decision trees to deep neural networks trained on time-series data.
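The feature-based approach described above can be illustrated with a minimal sketch. It assumes 6-axis samples (accelerometer x/y/z followed by gyroscope x/y/z) grouped into fixed-length windows, a hand-picked set of statistical features, and a nearest-centroid classifier. The feature set, the classifier, and all names here are illustrative assumptions, not the recognizer actually used in the project.

```python
import math
from statistics import mean, pstdev

# One IMU sample: accelerometer x/y/z followed by gyroscope x/y/z (assumed layout).
Sample = tuple[float, float, float, float, float, float]


def extract_features(window: list[Sample]) -> list[float]:
    """Summarize a window of 6-axis samples as a fixed-length feature vector."""
    features: list[float] = []
    for axis in range(6):
        values = [sample[axis] for sample in window]
        # Per-axis statistics: average level, variability, and extremes.
        features.extend([mean(values), pstdev(values), min(values), max(values)])
    # Peak acceleration magnitude as a rough "intensity of the swing" cue.
    features.append(max(math.hypot(s[0], s[1], s[2]) for s in window))
    return features


class NearestCentroidGestureClassifier:
    """Assigns a window to the gesture whose mean feature vector is closest."""

    def __init__(self) -> None:
        self.centroids: dict[str, list[float]] = {}

    def fit(self, windows: list[list[Sample]], labels: list[str]) -> "NearestCentroidGestureClassifier":
        grouped: dict[str, list[list[float]]] = {}
        for window, label in zip(windows, labels):
            grouped.setdefault(label, []).append(extract_features(window))
        # Each centroid is the element-wise mean of its class's feature vectors.
        self.centroids = {
            label: [mean(column) for column in zip(*vectors)]
            for label, vectors in grouped.items()
        }
        return self

    def predict(self, window: list[Sample]) -> str:
        features = extract_features(window)
        # Euclidean distance in (unnormalized) feature space.
        return min(self.centroids, key=lambda label: math.dist(features, self.centroids[label]))
```

Usage would be to record labeled windows for each gesture (for example, "punch" and "block"), call `fit` once, and then call `predict` on each new window streamed from the controllers. In practice, the features would usually be standardized per dimension before distances are computed, and a decision tree or neural network could replace the nearest-centroid step while keeping the same windowing and feature extraction.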

Skills & Technologies:

DIVAN KIDS

Description:

DIVAN Kids is an innovative web-based app that provides evidence-based therapy and psychoeducational content for children aged seven to twelve with anxiety disorders, wrapped in engaging gamified experiences. To complement the app, a parent and therapist portal offers transparent monitoring. The entire development process follows a user-centered, iterative approach and is funded by a federal grant over three years.

Tasks:

In the early phase of the DIVAN Kids project, I advised the team on the strategic alignment of the technology and hardware architecture. My focus was on selecting the most appropriate delivery medium (VR, mobile app, desktop application, or web platform) to provide the best user experience for children, parents, and psychotherapists. In addition, I advised the project on the technical integration between the parent/therapist portal and the core gaming application, ensuring seamless data flow and interaction while fully complying with privacy requirements.

In the initial stages, I contributed to a deployment concept for clinicians and patients by designing scenarios for integrating the app into outpatient therapy sessions and children's daily routines to enhance effectiveness and acceptance.

I formulated specific use cases illustrating how the app can be incorporated into therapeutic interventions and everyday life to boost engagement and adherence. I also contributed creative ideas for playful elements, from mini-games and narrative storylines to reward systems, that later formed the foundation of the gamification components.

After the decision was made to implement the application as a web app, I stepped back from active involvement in the project to allow other team members to lead the subsequent development and implementation phases.

Skills & Technologies:

COMPASS

Description:

The COMPASS project aims to fundamentally improve navigation in minimally invasive surgery through an intelligent assistance system. Due to the limited field of view during endoscopic procedures, surgeons often encounter technical and cognitive limitations. By leveraging 3D endoscopy and stereoscopic image processing, a dynamic, patient-specific anatomical map is generated. This map is rendered within a virtual reality environment, enabling immersive and intuitive orientation inside the body. The map displays real-time information on relevant anatomical structures, directional guidance, and suggestions for the next surgical steps, continuously adapting to the current position and movement of the endoscope. The VR-based visualization enhances spatial understanding and simplifies navigation in hard-to-visualize regions. By combining intelligent visualization with interactive guidance, operational precision is increased and the cognitive load on the surgical team is reduced. The ultimate goal is to sustainably enhance the safety, efficiency, and adoption of minimally invasive procedures through the integration of such advanced assistance systems.

Tasks:

Three months before the completion of the COMPASS project, I assumed technical leadership and took full responsibility for the development process during its final phase. In close collaboration with the lead developer, I coordinated all activities and aligned them with the timely delivery of the system. My primary focus was on providing technical guidance during implementation and on systematic troubleshooting and error resolution. By analyzing existing issues and developing practical solutions, we stabilized the core functionalities and successfully completed the integration of all components.

Skills & Technologies:

SMART EAT

Description:

SMART EAT connects public health research, VR/AR software development, gamification, and social psychology to provide students with interactive learning environments that integrate nutritional science with modern technologies. Wearing a VR headset, adolescents enter a virtual supermarket, make decisions about products, packaging, and societal influences, and then receive personalized feedback that promotes healthier and more sustainable choices, drawing on information such as the Nutri-Score and ecological labels.

Tasks:

The project is currently in the preproduction phase. During this stage, my focus lies on requirements analysis, scenario development, and the design of the software architecture. Through close collaboration with subject matter experts from public health, VR/AR engineering, gamification, and social psychology, I ensure that functional and non-functional requirements are clearly defined, that learning and decision scenarios are logically modeled, and that the technical foundation for future implementation is robustly established.

Skills & Technologies:

DIGI EAT

Description:

DigiEat develops behaviorally grounded VR scenarios that realistically simulate social eating situations and enhance immersion through targeted olfactory stimuli. Scent-based therapy modules are enabled by synchronizing VR experiences with scent delivery devices, allowing patients to perform intensive acute and maintenance exercises both during outpatient therapy and at home, which reduces waiting times and improves long-term treatment outcomes. The combination of VR simulations and scent technology bridges the existing gap between conventional behavioral therapy and digital support.

Tasks:

My team was tasked with taking over the development of the VR application. We performed preparatory work and adapted our existing system, originally developed for ReliVR; however, the design and elaboration of the scenarios have not yet begun.

Skills & Technologies:

REEVALUATE

Description:

REEVALUATE is a Horizon Europe project that develops a holistic solution for managing the digitization of cultural heritage (CH). The goal is to foster collaboration, enhance creative reuse, and enable democratic and inclusive prioritization and contextualization. Following an initial analysis of existing weaknesses and opportunities in CH digitization, a modular framework will be created that covers all phases of the lifecycle of a digitized artifact, from prioritization and contextualization to secure storage, collaboration, and reuse. This framework is supported by a set of technological enablers whose open versions will be provided by the consortium for integration into the European Collaborative Cloud for Cultural Heritage (ECCCH).

At its core, REEVALUATE empowers all stakeholders to express their perspectives on artifacts and to reuse them effectively. Artificial intelligence is applied throughout the entire process: supporting manual contextualization, improving prioritization through public sensor technologies, visualizing ideas for creative reuse, suggesting collaborations and use cases, and verifying the contextual integrity of reused objects. The framework also ensures secure storage of digital artifacts and manages intellectual property rights through tokenization of CH objects and the implementation of smart contracts for reuse. Additionally, it provides a standardized semantic representation of metadata to enhance accessibility, discoverability, and the automated detection of misuse of digitized CH objects. The individual enablers of REEVALUATE can be adopted individually or as an integrated suite, depending on the needs of cultural heritage institutions. Overall, the project delivers to the European CH sector a comprehensive toolkit that enables effective management of digital collections, opens the process to society, and promotes the creation of social value through the creative reuse of cultural heritage.

Tasks:

I was briefly involved in an advisory capacity during the planning phase of the REEVALUATE project. I supported the project with my expertise in gamification and developed ideas for demonstration mini-games that engage users with digitized cultural heritage objects in interactive and appealing ways.

Skills & Technologies:

COOLCUT

Description:

The COOLCUT project is a three-year initiative dedicated to developing a highly precise, minimally invasive laser system for the removal of bone tumors in the jaw and facial skull region. The surgery is accompanied in real time by laser-spectroscopic tumor detection and supported by a robot-assisted navigation system.

Key partners include the Fraunhofer Institute for Laser Technology (ILT), the Department of Oral and Maxillofacial Surgery at RWTH Aachen University Hospital, and EdgeWave GmbH. Nuromedia GmbH is responsible for AI-driven medical image processing and surgical planning. In addition, Nuromedia is developing a virtual-reality-based navigation and control system that enhances surgical precision, as well as an AI-supported adaptation of the robotic assistance that adjusts laser cutting parameters in real time.

The outcome will be a fully integrated system that minimizes structural damage shortens treatment time and significantly improves the success rate in treating maxillofacial tumors.

Tasks:

I was briefly involved in implementation planning at the start of the project, focusing on the design of the navigation system.

Skills & Technologies:

Portals United

Description:

Portals United is a comprehensive online platform for developers working with the World Builder and Portal Hopper ecosystem. The website offers an interactive forum where programmers can ask questions, share code snippets, and collaborate on projects. The forum is complemented by extensive documentation and step-by-step tutorials that make the World Builder, the Portal Hoppers, and related VR technologies such as VRWeb transparent and accessible. In addition, key components of VRWeb are explained, and the VRML Protocol Definition has been published so that developers receive clear guidelines for communication between virtual worlds and their underlying infrastructure. Portals United thus serves as a central knowledge and collaboration hub for the VR community.

Tasks:

I built the entire website from the ground up, designing and implementing the structure of the developer forum as well as the documentation and tutorial pages for the World Builder and Portal Hopper. Together with my team, I populated the content, documented the VRWeb modules, and published the VRML Protocol Definition. In the forum, we actively answered questions, provided ongoing support, and fostered collaboration among developers.

Skills & Technologies:

VRWeb

Description:

VRWeb is currently in the conceptualization phase and builds upon a wide range of research and funding initiatives. The goal is to create a decentralized metaverse that unifies entertainment, education, and healthcare on a single, democratically governed platform where no single actor owns the system. By integrating the Web 3.0 paradigm, a privacy-centric framework emerges in which content is distributed across nodes, age ratings are managed dynamically, and a transparent payment system operates.

A central idea of the project is to use VR and AR to transform content that has traditionally been presented only in two dimensions on screens into immersive three-dimensional environments. This immersive added value draws users more deeply into 3D content and enables new learning, entertainment, and therapeutic experiences. This technology will be embedded as a core component of the Distributed Metaverse to enable seamless transitions between conventional 2D displays and immersive 3D spaces.

Tasks:

I played a key role in developing the VRWeb concept, from initial ideation to defining the VR/AR, data privacy, and payment systems. I collaborated closely with research and funding partners to translate the vision of a democratic, 3D-centered metaverse into concrete project planning.

Skills & Technologies:

VRML

Description:

VRML is an XML-based file format that specifies how a location should be displayed within a virtual reality environment. Supporting software, particularly the HOPPER protocol handler, reads the file, loads the referenced content, and delivers the described experience.

The original version 1.0 could not be used universally or in a platform-independent way because it relied too heavily on existing software systems. Version 2.0 was developed as an open, extensible standard protocol to address these limitations and to incorporate insights from recent years.

VRML v2.0 provides a structured, legally sound, and extensible format that reliably governs the display of VR content.

A streaming variant of this format is currently under development to enable VR content to be rendered from a continuous data stream.

Tasks:

Together with a colleague, I designed and refined the VRML standard and brought it to production readiness. We developed the specification, defined implementation guidelines, and tested compatibility with existing HOPPER implementations, ensuring that the format can now be used reliably in production-grade VR applications.

Skills & Technologies:

WESTNETZ

Description:

For Westnetz, we developed several training and educational applications, including a crisis simulation for a substation and an introductory learning module for the new digital local network stations. The first application focuses on procedures and communication, while the second emphasizes the new technology.

These projects are presented in this article together with another project partner, the University of Wuppertal (see the images below).

Tasks:

I designed the code architecture and developed most of the systems myself. Notable contributions include a dialogue system for phone and NPC conversations, evaluation systems for user actions, and a complete power and data simulation of a digital local network station.

Skills & Technologies:

KLIMA DIGITS

Description:

KlimaDigits was developed for EnergieAgentur.NRW in Unreal Engine for the Pico G2. The target group is school classes in grades 7 to 10 in North Rhine-Westphalia. The topic of this edutainment application is the energy transition and the digitization of the power grid. In addition to the application, an exercise book was developed, which students work through in pairs in combination with a VR headset. The tasks can only be solved together: one student receives information in VR and has to pass it on to their partner, who uses it to answer the question in the exercise book. Halfway through the application, the students swap roles, so that the second student becomes the VR user while the first works with the exercise book.

Tasks:

In this project, I was responsible for implementing two chapters and for supporting my colleagues in implementing the other chapters. I advised them on troubleshooting and software architecture.

Skills & Technologies:

Grüne Woche

Description:

We developed a VR trade show showcase (Oculus Rift S) for the Bundesministerium für Ernährung und Landwirtschaft (BMEL / Federal Ministry of Food and Agriculture). Beyond its obvious purpose as trade fair entertainment, the application was meant to teach consumers the difference between official and counterfeit food seals. My share was primarily the integration of the storyline and the interactions with the world. The project was designed for room-scale VR with real objects in the tracking area: virtual objects had to be placed in a physical shopping cart.

Skills & Technologies:

AXA CyberSecurity

Description:

For the insurance company AXA, I developed a VR application (Oculus Go) at senselab.io for a nationwide roadshow. The aim was to provide a humorous and entertaining introduction to IT security and the associated AXA products within a very short time. In addition to my role as project lead, I was largely responsible for the staging and animation, which I implemented using Timeline and keyframe animations in Unity.

Skills & Technologies:

BAUMA

Description:

As part of the BAUMA trade fair, we developed a multi-user VR experience for Messe München using the HTC Vive Pro with wireless capability. The goal was to introduce visitors to the possibilities of virtual reality while showcasing our sponsors' products. The BAUMA VR experience was a room-scale application in which four users (one guide and three visitors) jointly explored a virtual construction site. Users interacted with the environment through hand tracking with Leap Motion. Immersion was further enhanced by a vibration platform and multiple wind machines. In addition to the primary VR modes for the guide and participants, we also developed an operator mode and a spectator mode, both of which were successfully deployed at the trade fair as well.

Tasks:

My main responsibilities on this project included the network stack, the development of the mode system, the DOKA assembly animation (created with keyframes in Unity), the integration of promotional videos for UVEX, and the spectator mode, which was implemented as a television-style broadcast featuring multiple camera angles and movements using Cinemachine.

Skills & Technologies:

TÜV Süd - Schaltbefähigung

Description:

"Schaltbefähigung" is a training application honored with the Immersive Learning Award 2018, developed in collaboration with TÜV SÜD Akademie. It is successfully used in classroom training to conduct virtual examination tasks such as operating electrical installations or de-energizing systems.

During the training, highly dangerous scenarios can be practiced completely risk-free, which adds significant value beyond efficiency and time savings.

Skills & Technologies:

TÜV Süd - HV-Freischalten

Description:

The VR application "HV De-energizing of Electric Vehicles" trains key procedures for the safe maintenance of electric vehicles. Automotive workshops across Germany can quickly and cost-effectively train their staff to repair electric cars.

The realistic depiction of a widely used hybrid vehicle enables practical training without exposing trainees to the dangers of a real high-voltage system. The selection of electric vehicles is continuously being expanded, because the required work steps vary by make and model.

Skills & Technologies:

Valnir Rok

Description:

Valnir Rok is an online sandbox survival RPG inspired by Norse mythology. Players awaken on the island of Valnir and must do everything possible to survive in a land teeming with wild animals, mythical creatures, and ruthless humans.

Tasks:

One of my first responsibilities at Encurio was to develop a completely new combat system. This required a new network layer, because the old one could no longer handle even the existing slow-paced gameplay. Due to a tight deadline of just four weeks, only the critical movement and combat data were migrated to the new layer. We successfully reached the milestone for Battle for Valhalla, a battle royale game built on the existing MMORPG Valnir Rok. However, the publisher decided to end the project because it had lost confidence in the economic potential of the battle royale format; this decision was not due to our work, which the publisher rated as excellent. As a result, the new combat system was integrated into Valnir Rok over the following five weeks. In addition, we fixed numerous bugs and greatly improved the game's performance, especially through a new world streamer with an advanced grass rendering system and a mesh-combining system.

Skills & Technologies:

Faith + Honor: Barbarossa

Description:

Faith & Honour: Barbarossa is a historically authentic adventure RPG set during the crusades of Frederick I Barbarossa.

Tasks:

After the update for Valnir Rok, I started on the concept and development of a new game, for which we had received funding from the Film- und Medienstiftung NRW. Faith + Honor: Barbarossa is a historical action-adventure RPG with turn-based tactical combat. The player follows the main character through an adventure in the time of Barbarossa, travels through the world of the High Middle Ages, and follows the crusaders to the Holy Land. The main goals for the project are to make the RPG elements and the tactical combat as beginner-friendly as possible and to reach a playthrough time of about 12 to 20 hours. I began developing the core systems for the game. In addition to developing gameplay concepts, I was responsible for the technical concept and the evaluation of engines and tools.

Skills & Technologies:

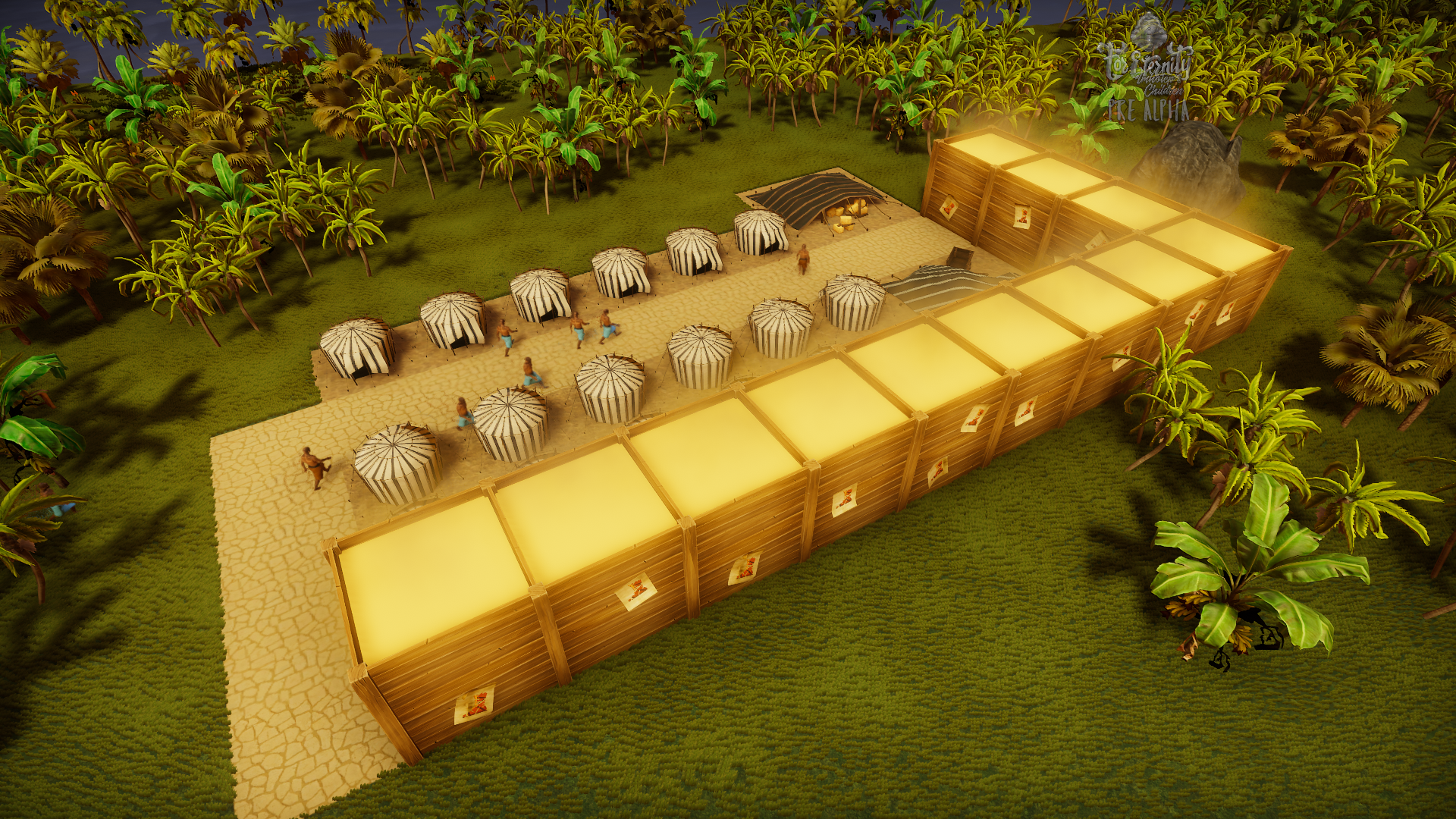

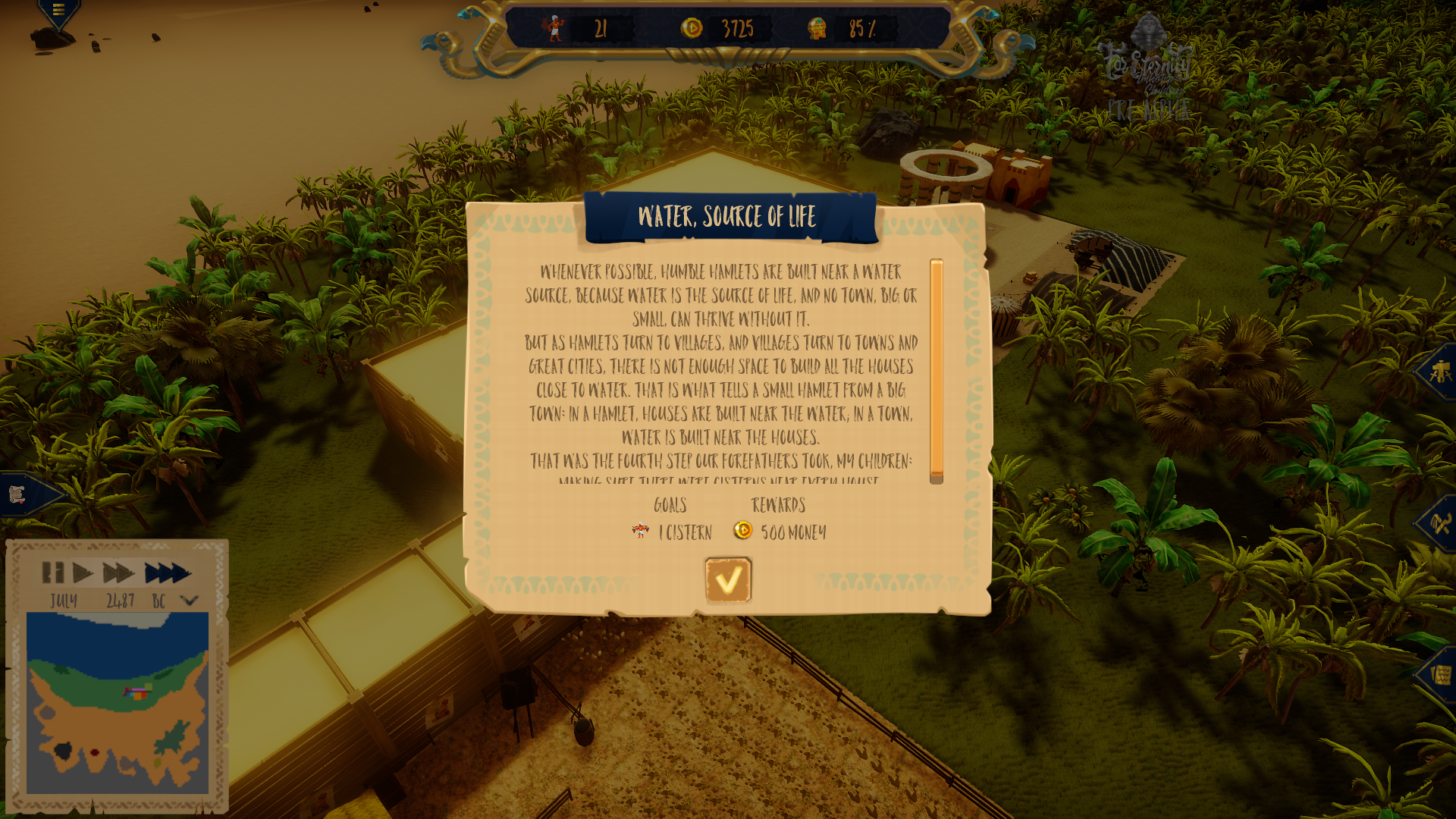

For Eternity - Imhotep's Children

Description:

"For Eternity - Imhotep's Children" is a private project: a city-building simulation set in ancient Egypt during the golden age of pyramid construction.

Tasks:

This is a list of the main systems planned for the game:

- Settings system (implemented)

- Localization system (implemented)

- Audio system (implemented)

- Input system (implemented)

- Camera control system (implemented)

- World/map system (implemented)

- Build system, including a building behavior system (implemented)

- Transport system (implemented)

- Trading system with supply and demand (implemented)

- Population system, including an NPC AI control system (implemented)

- Statistics system (implemented)

- Quest system (implemented)

- Event system for gameplay events

- Save and load system

For this game, I developed the story, quests, and events and wrote them together with an author. In addition, I managed the creation of assets by freelancers, e.g., music, voice-over, UI elements, and more. I then integrated these assets into the Unity project and connected them to the systems I programmed. No one other than me has worked on the project within Unity.

Skills & Technologies:
